Running Open-Source Generative AI Models on Replicate

A brief on running open-source text, code, image, and music generation models on Replicate.

Since Meta launched Llama, open-source generative artificial intelligence (AI) models have attracted significant attention for their ability to generate text, images, and music. These models are available under open-source licenses, which allow researchers and developers to access, modify, and distribute the code freely. This has led to a further surge in the development and hosting of open-source models, with many downstream benefits. In this blog post, we will explore how to run open-source generative AI models on Replicate, an up-and-coming model hosting platform.

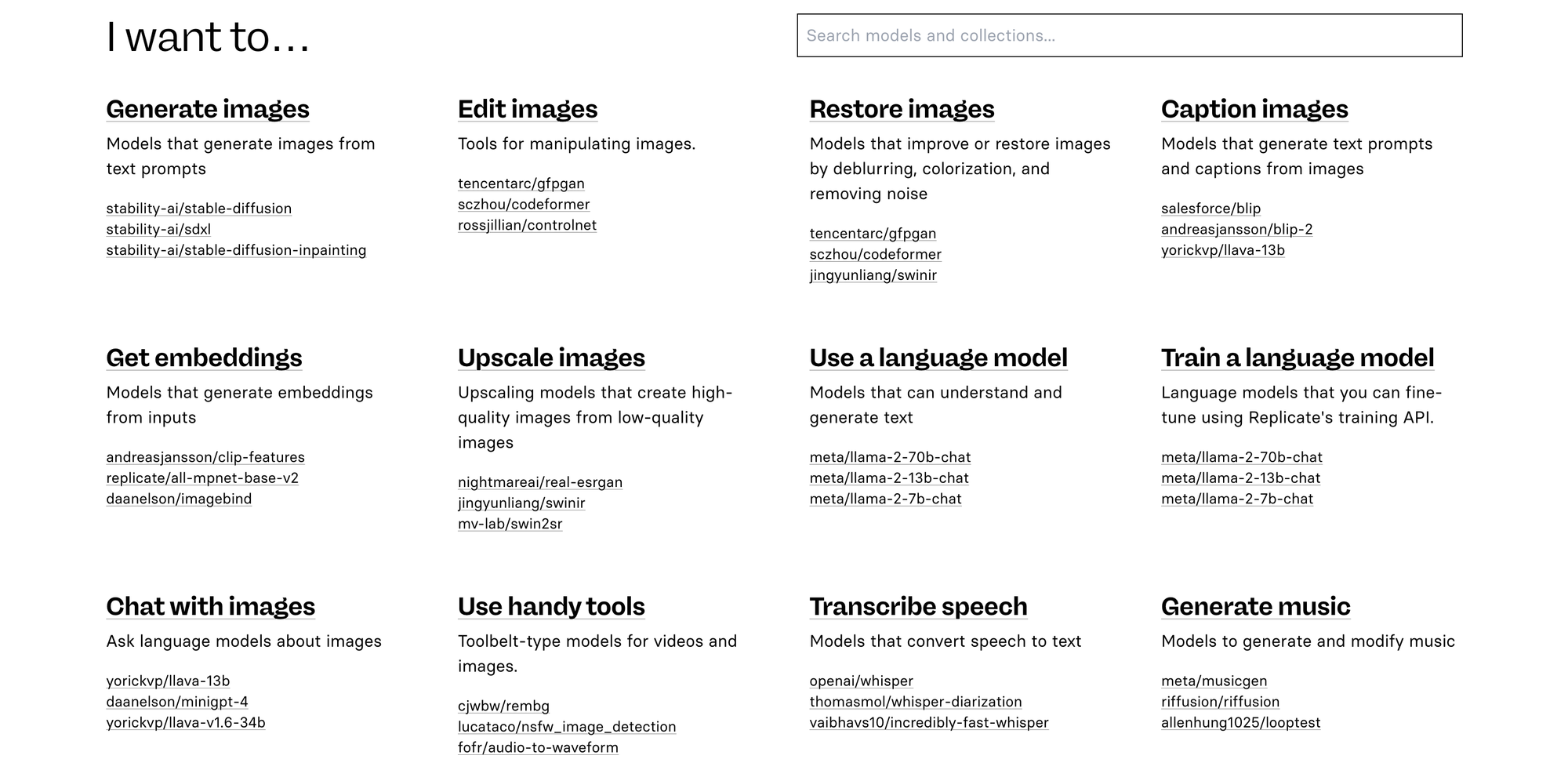

What is Replicate?

Replicate is a relatively new platform for hosting and running machine learning (ML) models through a cloud API, without having to manage your own infrastructure. While you can also run private ML models, Replicate has been in the spotlight for making it easy to run public, open-source models like Meta Llama, Mistral, Stable Diffusion, and others. When you use public models, you pay only for the time the model is actively processing your requests.

To follow along, sign up for an account at Replicate and get an API key. I'll use Python code samples published by Replicate to build a simple model playground using Streamlit. The models used in my app below are maintained by Replicate, but there are plenty of others for you to explore.
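
As a minimal sketch of the setup step, the snippet below checks that an API token is configured before any model call is made. The `replicate` Python client reads the token from the `REPLICATE_API_TOKEN` environment variable; the helper name `require_api_token` is hypothetical and not part of the client.

```python
import os


def require_api_token(env=None):
    """Return the Replicate API token, or raise if it is not configured.

    `env` defaults to the process environment; passing a plain dict makes
    the check easy to exercise without touching real credentials.
    """
    env = os.environ if env is None else env
    token = env.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "Set REPLICATE_API_TOKEN to the API token from your Replicate account."
        )
    return token
```

Calling `require_api_token()` once at app startup lets the playground fail early with a readable message instead of failing on the first prediction.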

Generate Text using Meta Llama 3 70B Instruct Model

Meta Llama 3 70B Instruct is a 70 billion parameter language model from Meta, pre-trained and fine-tuned for chat completions. It has a context window of 8000 tokens, double that of Llama 2. You can modify the system prompt to guide model responses. The model is currently priced at $0.65 / 1M input tokens, and $2.75 / 1M output tokens.
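
To make the context-window figure concrete, here is a rough sketch of a pre-flight check that the system prompt, the user prompt, and the reply budget together fit within 8000 tokens. It assumes about four characters per token, which is only a rule of thumb for English text and not the Llama 3 tokenizer; the helper names are hypothetical.

```python
CONTEXT_WINDOW = 8000  # context window quoted in this post
CHARS_PER_TOKEN = 4    # assumption: rough heuristic, not the real tokenizer


def rough_token_count(text):
    """Approximate the token count from character length (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_context(system_prompt, prompt, max_new_tokens=500):
    """Check that the prompts plus the reply budget stay within the window."""
    used = rough_token_count(system_prompt) + rough_token_count(prompt)
    return used + max_new_tokens <= CONTEXT_WINDOW
```

The default of 500 mirrors the `max_new_tokens` value in the sample for this model. For exact counts you would need the model's own tokenizer.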

Here's sample Python code to run the meta/meta-llama-3-70b-instruct model using Replicate's API - you'll find the code for my Streamlit app here.

# Run meta/meta-llama-3-70b-instruct model on Replicate

output = replicate.run(

"meta/meta-llama-3-70b-instruct",

input={

"debug": False,

"top_k": 0,

"top_p": 0.9,

"prompt": prompt,

"temperature": 0.6,

"system_prompt": "You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.\n\nIf a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.",

"max_new_tokens": 500,

"min_new_tokens": -1

},

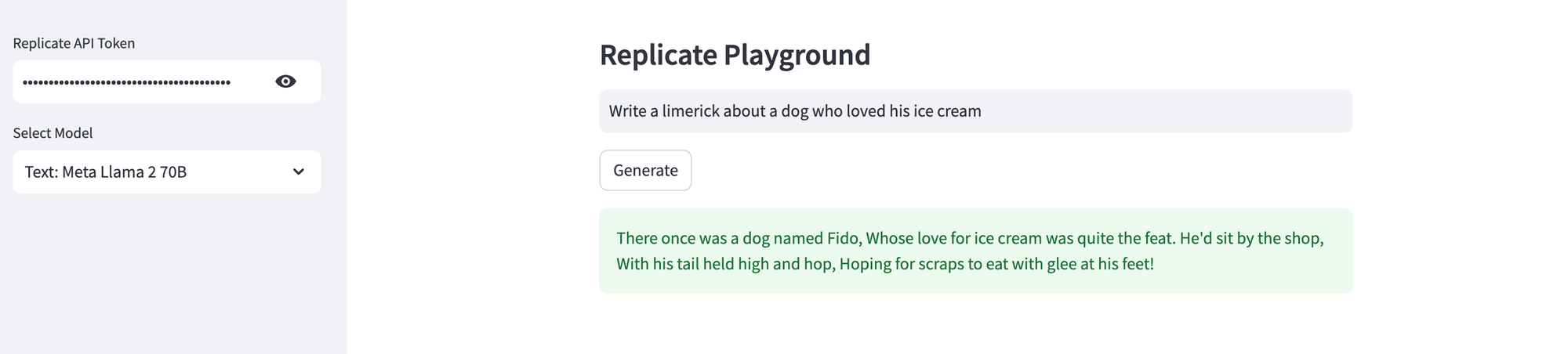

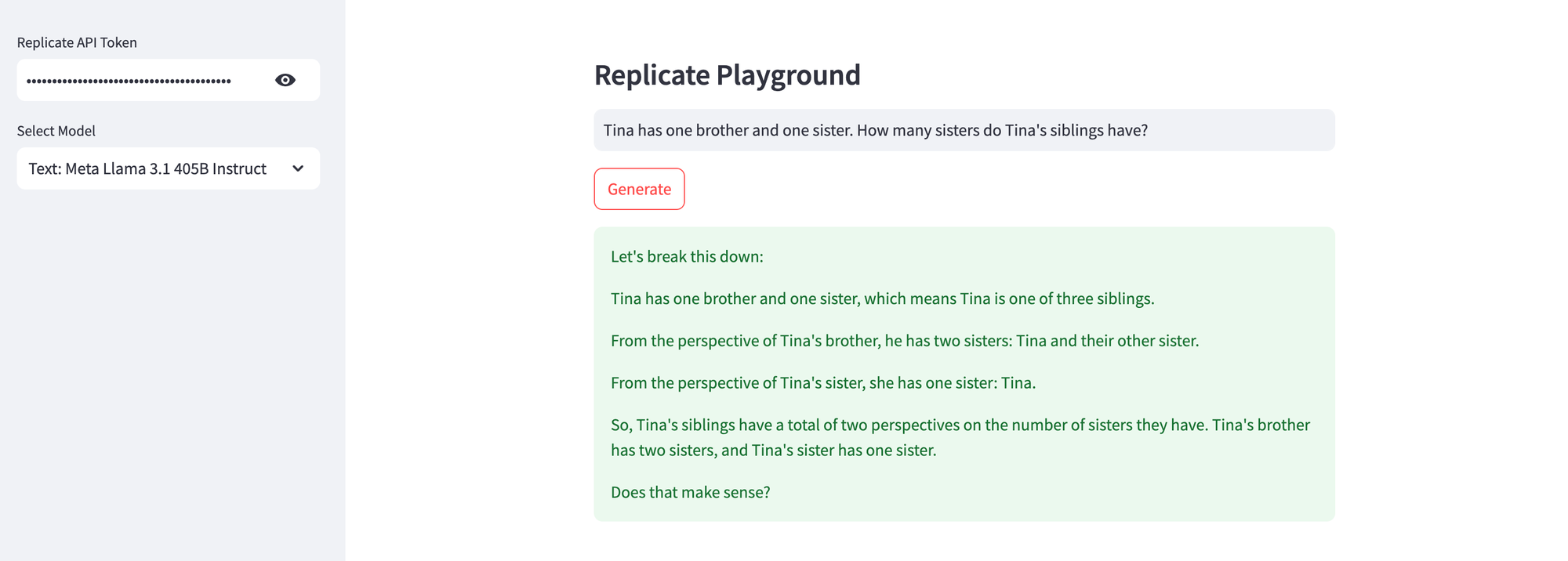

)Reason using Meta Llama 3.1 405B Instruct Model

Meta Llama 3.1 405B Instruct is a just-released 405 billion parameter multilingual language model from Meta, pre-trained on more than 15 trillion tokens and fine-tuned for chat completions. It supports eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai. It has a context window of 8000 tokens, and you can modify the system prompt to guide model responses. The model is currently priced at $9.50 / 1M tokens, for both input and output.
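
As a quick worked example of per-token pricing, the sketch below compares what the same request would cost on the 70B and 405B models, using only the prices quoted in this post. The request size (1,000 prompt tokens, 500 generated tokens) and the function name are hypothetical.

```python
def token_cost(input_tokens, output_tokens, input_price, output_price):
    """USD cost of one request; prices are given per 1M tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Per-1M-token prices quoted in this post: (input, output)
LLAMA_3_70B = (0.65, 2.75)
LLAMA_3_1_405B = (9.50, 9.50)

# Hypothetical request: 1,000 prompt tokens and 500 generated tokens
cost_70b = token_cost(1_000, 500, *LLAMA_3_70B)
cost_405b = token_cost(1_000, 500, *LLAMA_3_1_405B)

print(f"70B:  ${cost_70b:.6f}")
print(f"405B: ${cost_405b:.6f}")
```

For this request the 70B model comes to about $0.002025 and the 405B model to about $0.014250, roughly seven times as much.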

Here's sample Python code to run the meta/meta-llama-3.1-405b-instruct model using Replicate's API - you'll find the code for my Streamlit app here.

# Run meta/meta-llama-3.1-405b-instruct model on Replicate

output = replicate.run(

"meta/meta-llama-3.1-405b-instruct",

input={

"debug": False,

"top_k": 50,

"top_p": 0.9,

"prompt": prompt,

"temperature": 0.6,

"system_prompt": "You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.\n\nIf a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.",

"max_tokens": 1024,

"min_tokens": 0

},

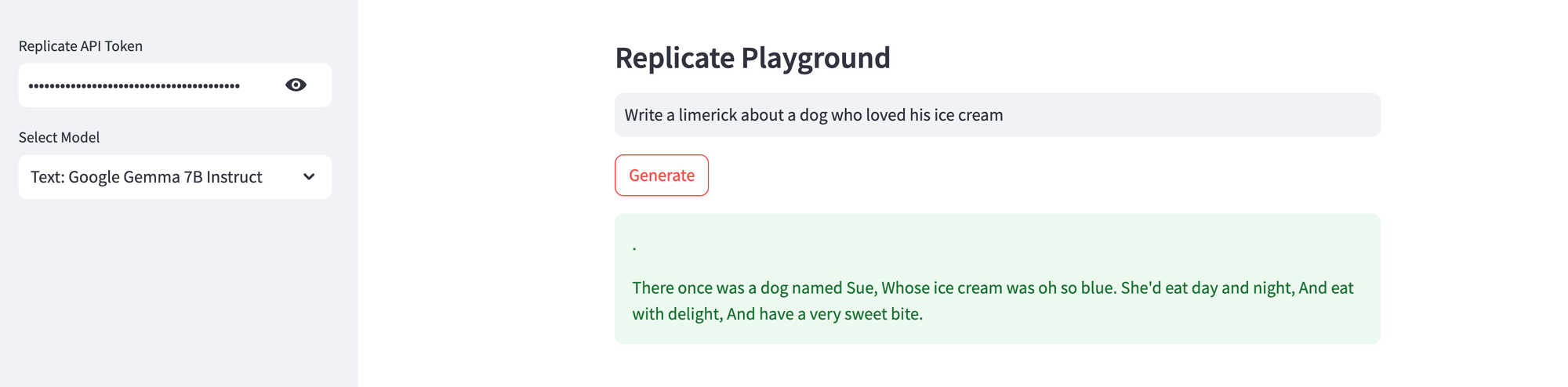

)Generate Text using Google Gemma 7B Instruct Model

Google Gemma 7B Instruct is a 7 billion parameter instruction-tuned language model from Google, built from the same research and technology used to create the larger, more powerful Gemini models. The model runs on NVIDIA A40 (Large) GPU hardware; each prediction typically completes within 6 seconds and costs $0.000725/sec ($2.61/hr).
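
Time-based pricing works differently from per-token pricing, so here is a small sketch of the arithmetic behind the figures above. The function names are hypothetical; the per-second rate and the typical 6-second run time are the ones quoted in this post.

```python
SECONDS_PER_HOUR = 3600


def hourly_rate(price_per_second):
    """Convert a per-second hardware price into an hourly price."""
    return price_per_second * SECONDS_PER_HOUR


def prediction_cost(run_seconds, price_per_second):
    """Cost of a single prediction billed by run time."""
    return run_seconds * price_per_second


GEMMA_PRICE_PER_SECOND = 0.000725  # NVIDIA A40 (Large) rate quoted in this post

print(f"hourly: ${hourly_rate(GEMMA_PRICE_PER_SECOND):.2f}")
print(f"typical prediction: ${prediction_cost(6, GEMMA_PRICE_PER_SECOND):.5f}")
```

At the quoted rate, a typical 6-second prediction costs a little under half a cent.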

Here's sample Python code to run the google-deepmind/gemma-7b-it model using Replicate's API - you'll find the code for my Streamlit app here.

# Run google-deepmind/gemma-7b model on Replicate

output = replicate.run(

"google-deepmind/gemma-7b-it:2790a695e5dcae15506138cc4718d1106d0d475e6dca4b1d43f42414647993d5",

input={

"top_k": 50,

"top_p": 0.95,

"prompt": prompt,

"temperature": 0.7,

"max_new_tokens": 512,

"min_new_tokens": -1,

"repetition_penalty": 1

},

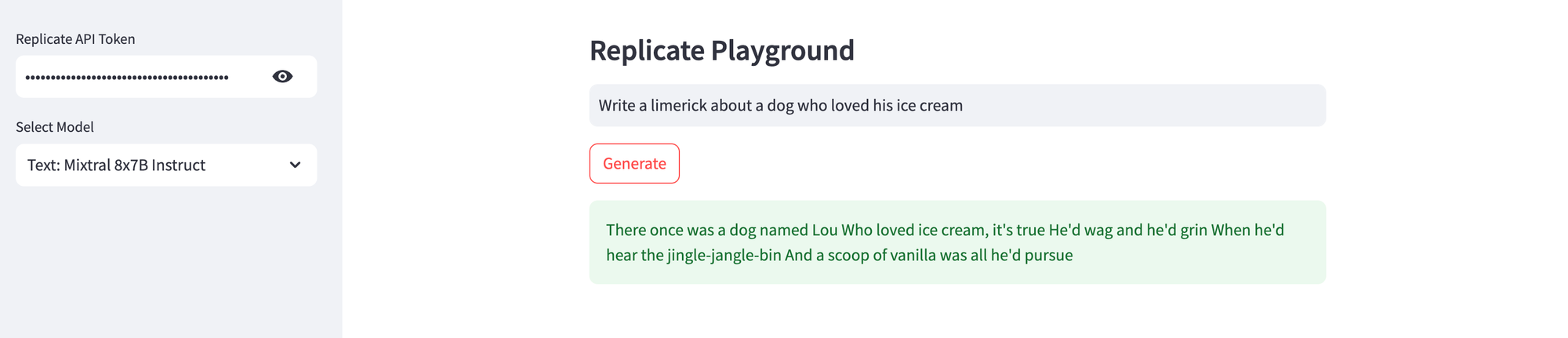

)Generate Text using Mixtral 8x7B Instruct Model

Mixtral 8x7B Instruct is a pretrained generative Sparse Mixture of Experts model from Mistral AI, tuned to be a helpful assistant. The model is currently priced at $0.30 / 1M input tokens, and $1.00 / 1M output tokens.
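
The sample for this model passes a `prompt_template` of `<s>[INST] {prompt} [/INST] `, which wraps the user's text in Mixtral's instruction markers. The sketch below previews the string the model would see, assuming the `{prompt}` placeholder is filled by plain substitution; how Replicate applies the template on its side is an assumption here, and `apply_template` is a hypothetical helper.

```python
PROMPT_TEMPLATE = "<s>[INST] {prompt} [/INST] "


def apply_template(prompt, template=PROMPT_TEMPLATE):
    """Fill the {prompt} placeholder with the user's text.

    str.replace is used instead of str.format so that any other braces in
    the template or the prompt are left untouched.
    """
    return template.replace("{prompt}", prompt)


print(repr(apply_template("Write a haiku about autumn.")))
```

Previewing the filled template this way can help when you customize it, for example to prepend fixed instructions ahead of the user's text.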

Here's sample Python code to run the mistralai/mixtral-8x7b-instruct-v0.1 model using Replicate's API - you'll find the code for my Streamlit app here.

# Run mistralai/mixtral-8x7b-instruct-v0.1 model on Replicate

output = replicate.run(

"mistralai/mixtral-8x7b-instruct-v0.1",

input={

"top_k": 50,

"top_p": 0.9,

"prompt": prompt,

"temperature": 0.6,

"max_new_tokens": 1024,

"prompt_template": "<s>[INST] {prompt} [/INST] ",

"presence_penalty": 0,

"frequency_penalty": 0

},

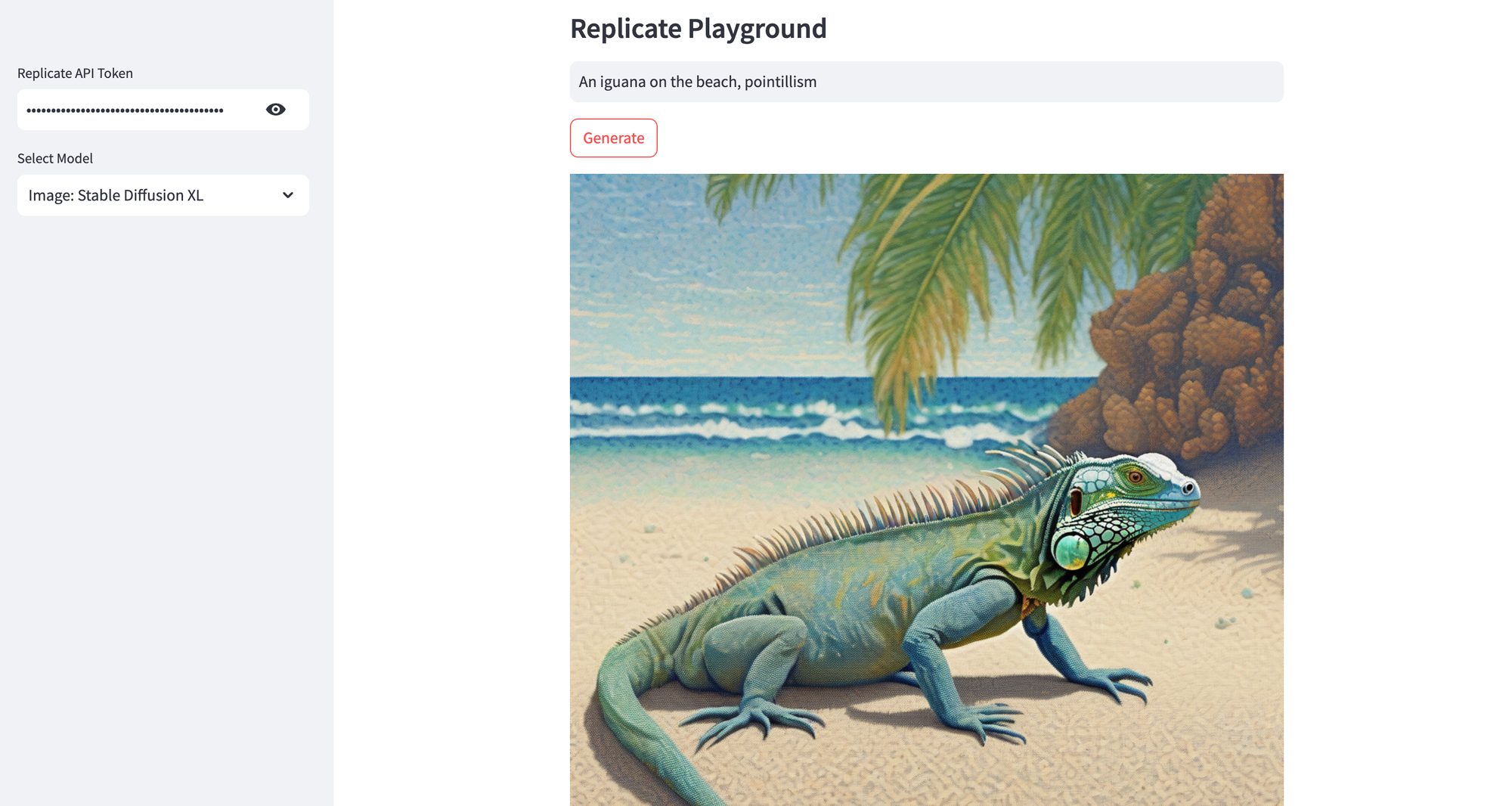

)Generate Image using Stable Diffusion 3 Model

Stable Diffusion 3 is a diffusion-based text-to-image generative AI model that creates beautiful images. It excels at photorealism, typography and prompt following. You can apply a watermark, and enable a safety check for generated images. The model is priced by the number of images generated; each image generation costs $0.035.
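
Per-image pricing makes budgeting straightforward. The sketch below, with hypothetical helper names, uses `Decimal` to avoid floating-point rounding surprises and the $0.035 price quoted above.

```python
from decimal import Decimal

PRICE_PER_IMAGE = Decimal("0.035")  # per-image price quoted in this post


def batch_cost(num_images):
    """Total cost in USD for generating `num_images` images."""
    return PRICE_PER_IMAGE * num_images


def images_within_budget(budget_usd):
    """Largest whole number of images that fits within the budget."""
    return int(Decimal(str(budget_usd)) // PRICE_PER_IMAGE)


print(batch_cost(10))           # cost of ten images
print(images_within_budget(1))  # images affordable with $1
```

With these numbers, ten images cost $0.35 and a $1 budget covers 28 images.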

Here's sample Python code to run the stability-ai/stable-diffusion-3 model using Replicate's API - you'll find the code for my Streamlit app here.

# Run stability-ai/stable-diffusion-3 image model on Replicate

output = replicate.run(

"stability-ai/stable-diffusion-3",

input={

"prompt": prompt,

"aspect_ratio": "3:2"

}

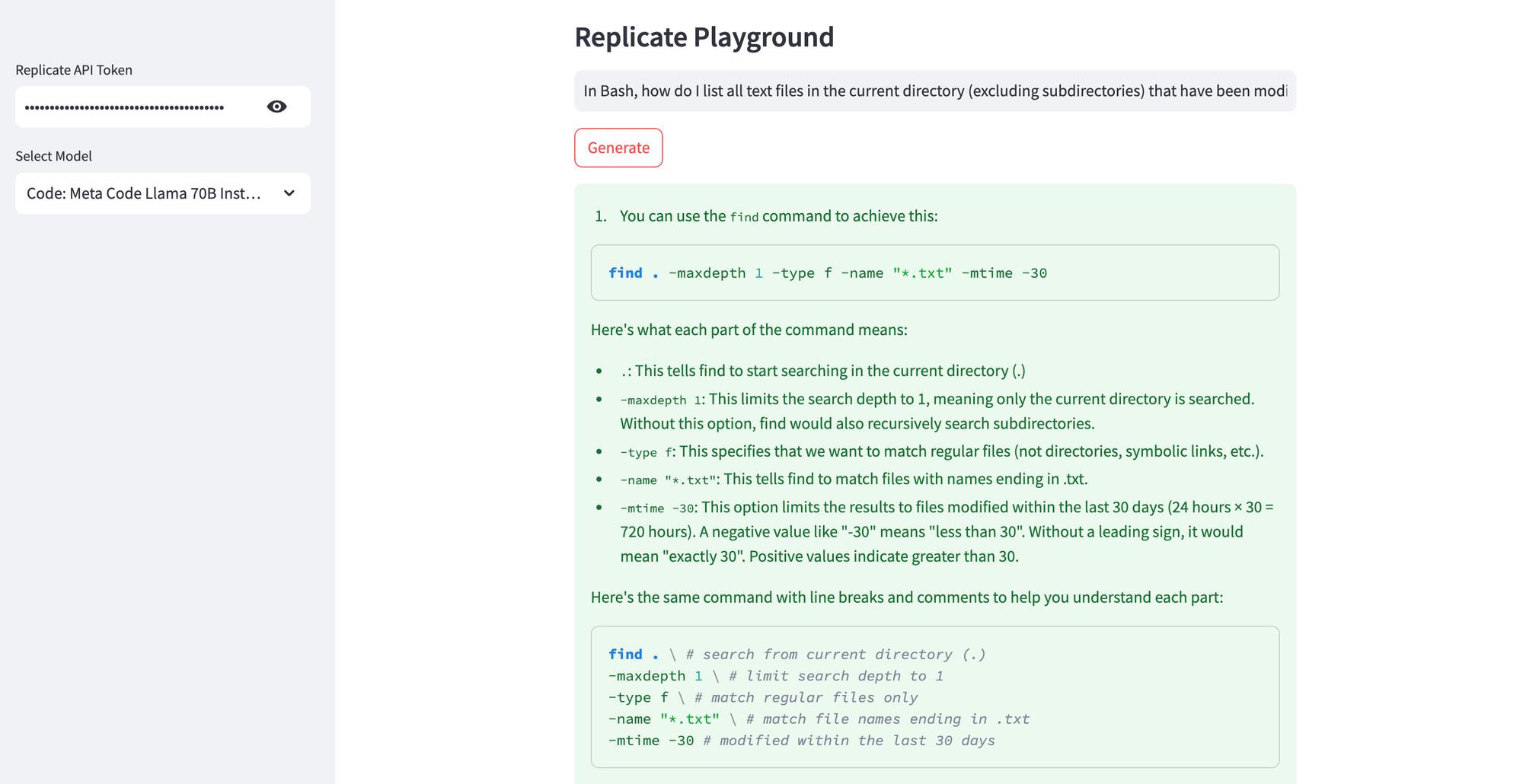

)Generate Code using Meta Code Llama 70B Instruct Model

Meta Code Llama 70B Instruct is a 70 billion parameter language model from Meta, pre-trained and fine-tuned for coding and conversation. The model runs on NVIDIA A100 (80GB) GPU hardware; each prediction typically completes within 22 seconds and costs $0.0014/sec ($5.04/hr).
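
The model identifiers in this post take two forms: a bare `owner/name`, as in the Llama 3 samples, and `owner/name:version` with a pinned version hash, as in the sample for this model. The sketch below splits an identifier into its parts, which can be handy for labeling models in a playground; `ModelRef` and `parse_model_ref` are hypothetical names, not part of the `replicate` client.

```python
from typing import NamedTuple, Optional


class ModelRef(NamedTuple):
    owner: str
    name: str
    version: Optional[str]  # None when no version hash is pinned


def parse_model_ref(ref):
    """Split 'owner/name' or 'owner/name:version' into its components."""
    path, _, version = ref.partition(":")
    owner, sep, name = path.partition("/")
    if not sep or not owner or not name or "/" in name:
        raise ValueError(f"expected 'owner/name[:version]', got {ref!r}")
    return ModelRef(owner, name, version or None)


pinned = parse_model_ref(
    "meta/codellama-70b-instruct:"
    "a279116fe47a0f65701a8817188601e2fe8f4b9e04a518789655ea7b995851bf"
)
print(pinned.owner, pinned.name, pinned.version[:8])
```

Pinning a version hash keeps results reproducible when a model is updated, while the bare form follows whatever version the model currently serves.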

Here's sample Python code to run the meta/codellama-70b-instruct model using Replicate's API - you'll find the code for my Streamlit app here.

    import replicate

    # Run meta/codellama-70b-instruct model on Replicate
    output = replicate.run(
        "meta/codellama-70b-instruct:a279116fe47a0f65701a8817188601e2fe8f4b9e04a518789655ea7b995851bf",
        input={
            "top_k": 10,
            "top_p": 0.95,
            "prompt": prompt,
            "max_tokens": 500,
            "temperature": 0.8,
            "system_prompt": "",
            "repeat_penalty": 1.1,
            "presence_penalty": 0,
            "frequency_penalty": 0
        }
    )

Convert Text to Audio using Suno AI Bark Model

Suno AI Bark is a transformer-based text-to-audio model created by Suno. Bark can generate highly realistic, multilingual speech as well as other audio, including music, background noise, and simple sound effects. The model runs on NVIDIA T4 GPU hardware; each prediction typically completes within 42 seconds, and the cost varies based on the inputs.

Here's sample Python code to run the suno-ai/bark model using Replicate's API - you'll find the code for my Streamlit app here.

# Run suno-ai/bark model on Replicate

output = replicate.run(

"suno-ai/bark:b76242b40d67c76ab6742e987628a2a9ac019e11d56ab96c4e91ce03b79b2787",

input={

"prompt": prompt,

"text_temp": 0.7,

"output_full": False,

"waveform_temp": 0.7,

"history_prompt": "announcer"

}

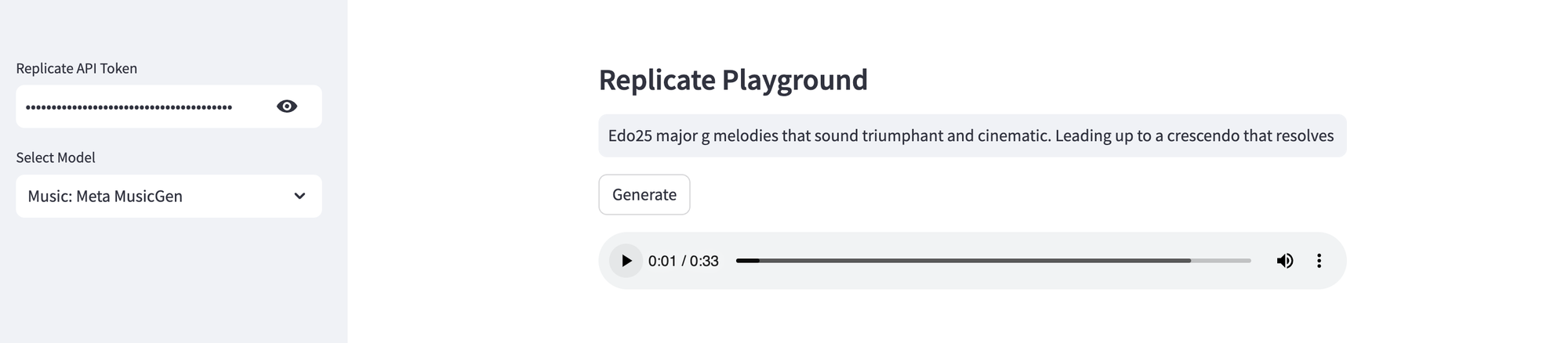

)Generate Music using Meta MusicGen Model

Meta MusicGen is a generative AI model from Meta, pre-trained to generate music from a prompt or melody. The model runs on NVIDIA A100 (40GB) GPU hardware; each prediction typically completes within 53 seconds and costs $0.00115/sec ($4.14/hr).

Here's sample Python code to run the meta/musicgen model using Replicate's API - you'll find the code for my Streamlit app here.

    import replicate

    # Run meta/musicgen model on Replicate
    output = replicate.run(
        "meta/musicgen:671ac645ce5e552cc63a54a2bbff63fcf798043055d2dac5fc9e36a837eedcfb",
        input={
            "top_k": 250,
            "top_p": 0,
            "prompt": prompt,
            "duration": 33,
            "temperature": 1,
            "continuation": False,
            "model_version": "stereo-large",
            "output_format": "mp3",
            "continuation_start": 0,
            "multi_band_diffusion": False,
            "normalization_strategy": "peak",
            "classifier_free_guidance": 3
        }
    )

Deploy the Streamlit App on Railway

To test these models, let's deploy the Streamlit app on Railway, a modern app hosting platform. If you don't already have an account, sign up using GitHub, and click Authorize Railway App when redirected. Review and agree to Railway's Terms of Service and Fair Use Policy if prompted. Launch the Replicate one-click starter template (or click the button below) to deploy it instantly on Railway.

Review the settings and click Deploy; the deployment will kick off immediately. Once the deployment completes, the Streamlit app will be available at a default xxx.up.railway.app domain - launch this URL to access the web interface. If you are interested in setting up a custom domain, I covered it at length in a previous post - see the final section here.