LLM Memory Management with Motörhead

A brief guide to Motörhead, an open-source memory and information retrieval server for large language models.

Large language models (LLMs) and chat models are typically stateless: they respond to a query based only on the context supplied in the input, and do not persist information between calls. For chatbots, persisting that context is critical for a coherent chat experience, since the bot has to remember what was said earlier in the conversation. Other applications may need to store relevant information for even longer. In this post, we'll explore LLM memory management using Motörhead, and how LangChain helps to abstract and integrate Motörhead capabilities into chains and agents.

What is Motörhead?

LangChain is an open-source framework created to aid the development of applications leveraging the power of large language models (LLMs). It can be used for chatbots, text summarisation, data generation, code understanding, question answering, evaluation, and more. Motörhead, on the other hand, is an open-source memory and information retrieval server for LLMs. It allows you to persist chat messages and other pieces of information to "memory" for much longer than the duration of a single chat conversation. In essence, Motörhead lets you do three things: store messages, retrieve stored messages, and delete stored messages.
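To make those three operations concrete, here is a minimal sketch of how they might map onto HTTP requests against the sessions/[SESSION_ID]/memory endpoint used later in this post. The base URL and session name are placeholders, and the JSON body shape (a "messages" list of role/content pairs) reflects my understanding of the Motörhead README, so treat it as an assumption and check it against your server version. The sketch only builds the requests; it does not send them.

```python
import json
from urllib.request import Request

# Placeholder: point this at your own Motörhead instance.
BASE_URL = "http://localhost:8080"


def memory_request(base_url, session_id, method, messages=None):
    """Build (but do not send) a request for a session's memory endpoint."""
    url = f"{base_url.rstrip('/')}/sessions/{session_id}/memory"
    body = None
    if method == "POST":
        # Assumed payload shape: {"messages": [{"role": ..., "content": ...}]}
        body = json.dumps({"messages": messages or []}).encode("utf-8")
    return Request(
        url,
        data=body,
        method=method,
        headers={"Content-Type": "application/json"},
    )


# Store, retrieve, and delete map onto POST, GET, and DELETE.
store = memory_request(
    BASE_URL,
    "demo",
    "POST",
    [
        {"role": "Human", "content": "ping"},
        {"role": "AI", "content": "pong"},
    ],
)
retrieve = memory_request(BASE_URL, "demo", "GET")
delete = memory_request(BASE_URL, "demo", "DELETE")
```

Passing any of these objects to urllib.request.urlopen() would actually send the request.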

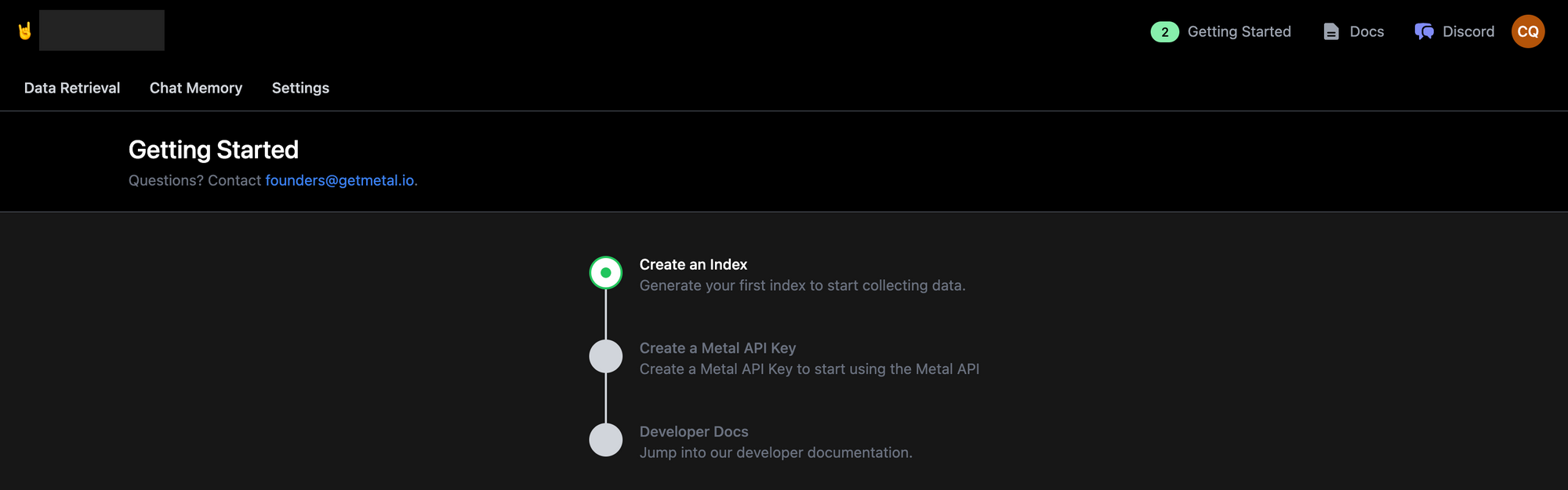

Metal, the company behind Motörhead, also offers a fully managed cloud service for LLM memory management. You can sign up with a Google account to access an easy-to-use web interface - their free plan is sufficient to get started.

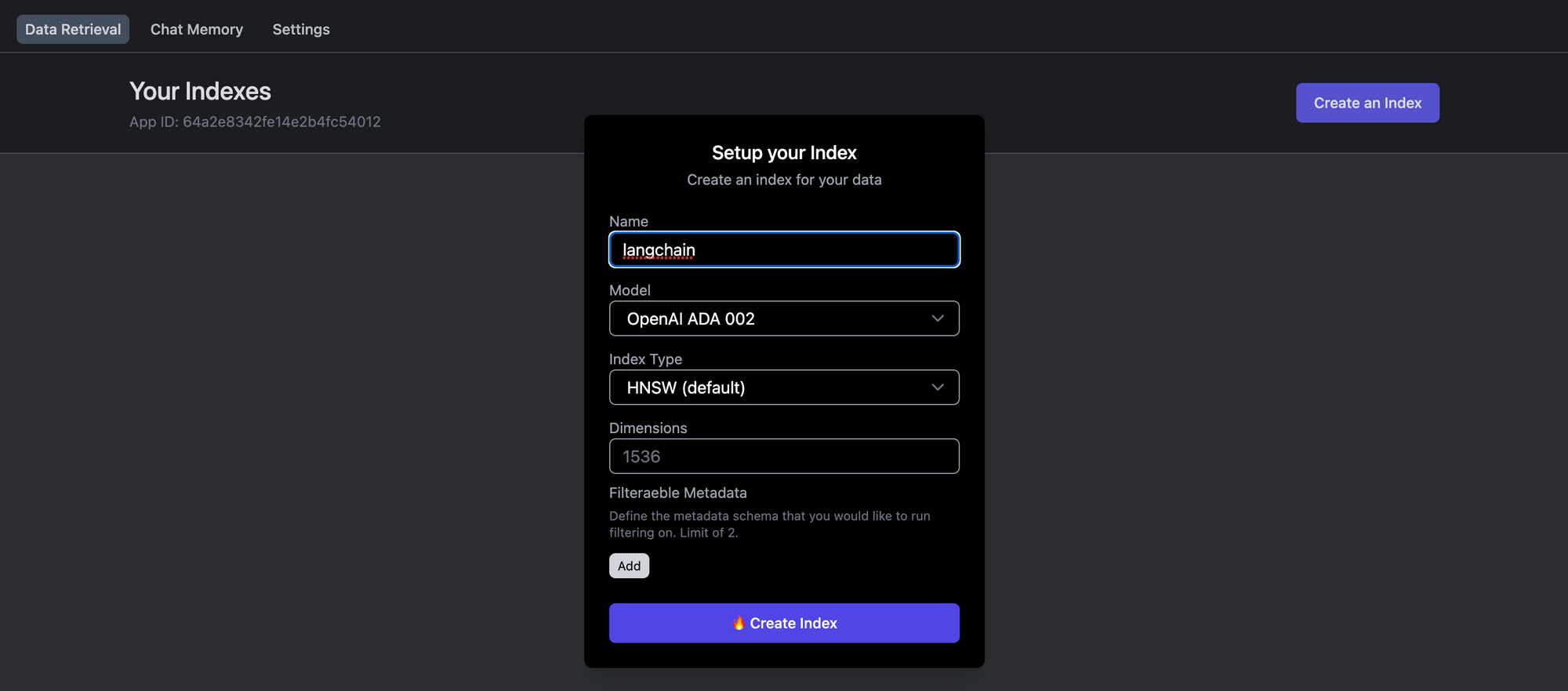

From the Data Retrieval tab, create an index, specify the model and index type, and click Create Index. Once the index is ready, you can import files, or add text or images, to create embeddings in the vector database.

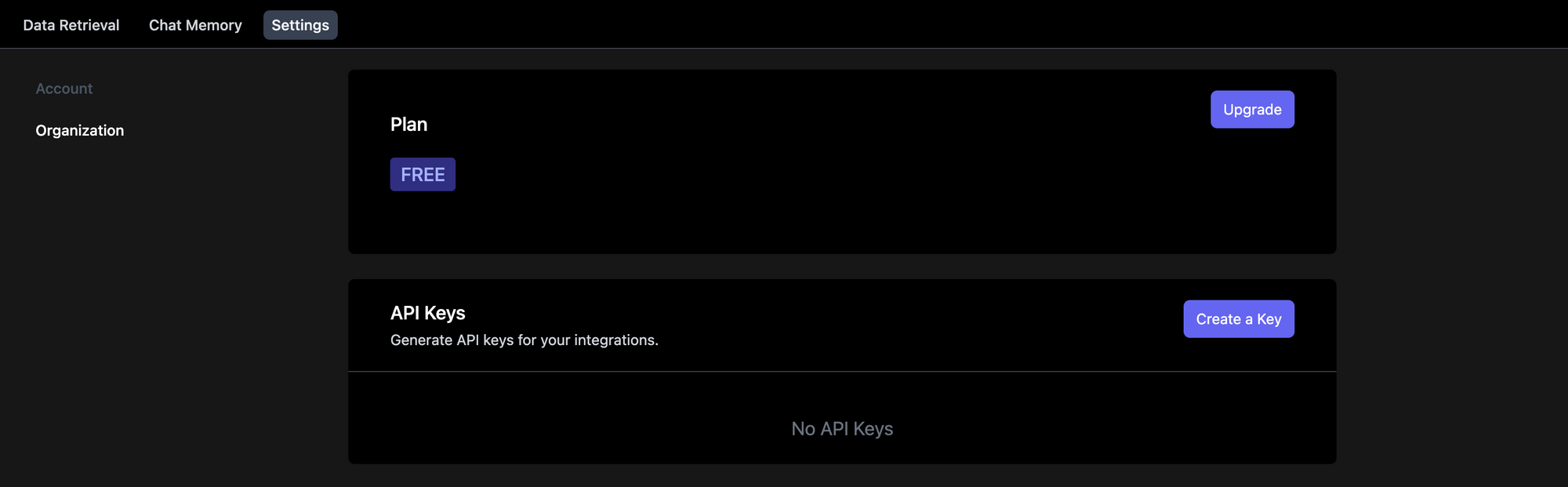

If you use this instance to store chat message history, you can see the conversations under the Chat Memory tab. We'll explore both the managed and self-hosted options below, so create an API key and note the client ID as well.
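As a rough illustration of where the API key and client ID end up, the sketch below mirrors how I recall LangChain's MotorheadMemory attaching them as request headers when it talks to the managed service. The managed URL and the two header names are taken from memory of that source code, not from this post, so treat them as assumptions and verify them against your installed version.

```python
# Assumption: the managed endpoint as I recall it from LangChain's source.
MANAGED_URL = "https://api.getmetal.io/v1/motorhead"


def motorhead_headers(url, api_key=None, client_id=None):
    """Return request headers for a managed or self-hosted Motörhead server."""
    headers = {"Content-Type": "application/json"}
    if url == MANAGED_URL:
        if not (api_key and client_id):
            raise ValueError(
                "The managed service needs both an API key and a client ID"
            )
        # Assumed header names; a self-hosted server does not need them.
        headers["x-metal-api-key"] = api_key
        headers["x-metal-client-id"] = client_id
    return headers


managed = motorhead_headers(MANAGED_URL, api_key="API_KEY", client_id="CLIENT_ID")
self_hosted = motorhead_headers("https://motorhead.up.railway.app")
```

The practical upshot is that the managed option needs both credentials on every call, while a self-hosted instance only needs its URL.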

For the self-hosted option, we'll use Railway to deploy a Motörhead instance.

Deploy the Motörhead Server on Railway

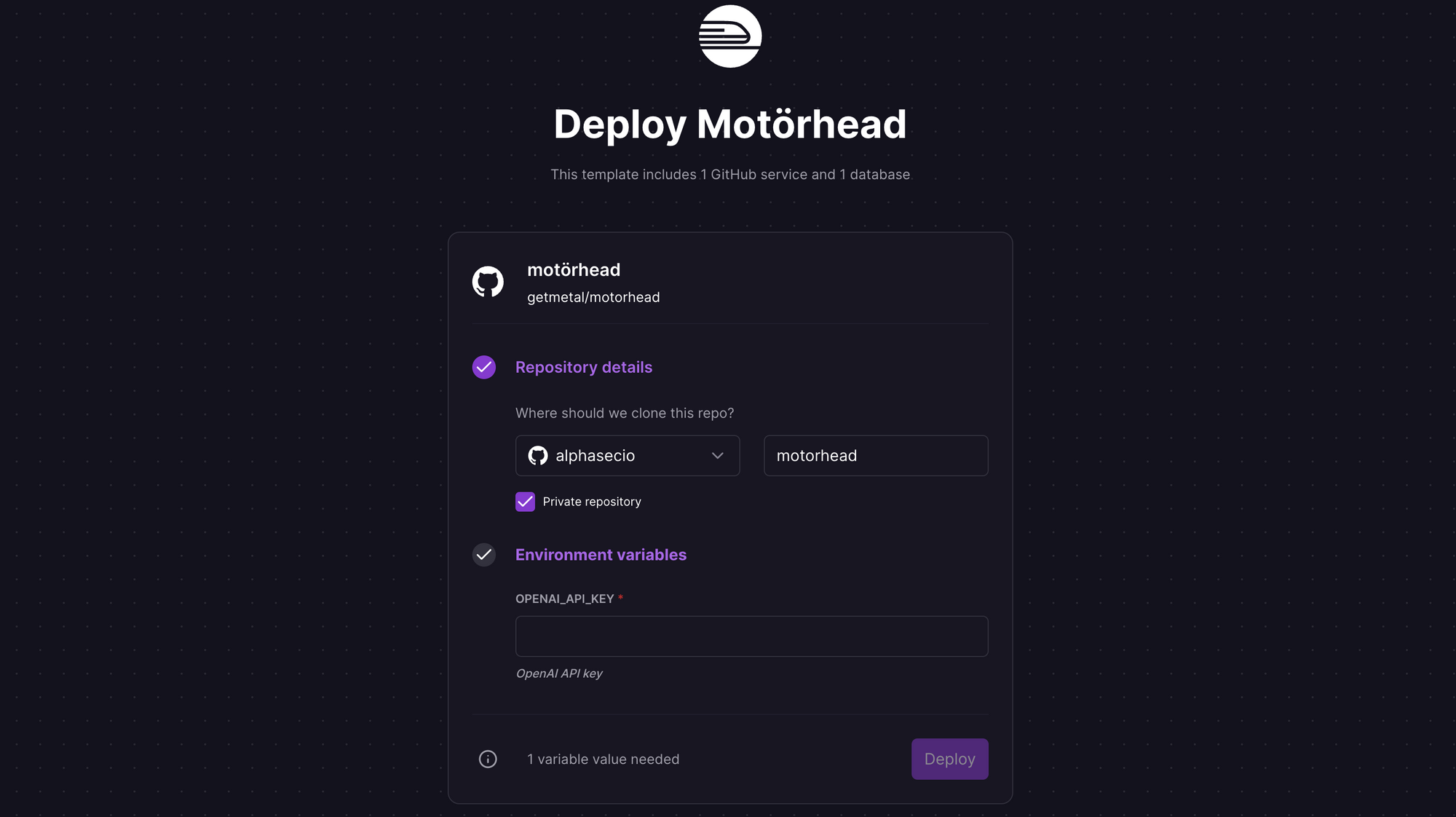

Railway is a modern app hosting platform that makes it easy to deploy production-ready apps quickly. Sign up for an account using GitHub, and click Authorize Railway App when redirected. Review and agree to Railway's Terms of Service and Fair Use Policy if prompted. Railway no longer offers an always-free plan, but the free trial is enough for this walkthrough. Launch the Motörhead one-click starter template to deploy the app instantly on Railway.

You'll be given an opportunity to change the default repository name and set it private, if you'd like. Provide the OPENAI_API_KEY variable value, and click Deploy; the deployment will kick off immediately. A Motörhead deployment also requires the REDIS_URL variable, but this is automatically configured at runtime by the Railway template, which provisions a Redis instance simultaneously.

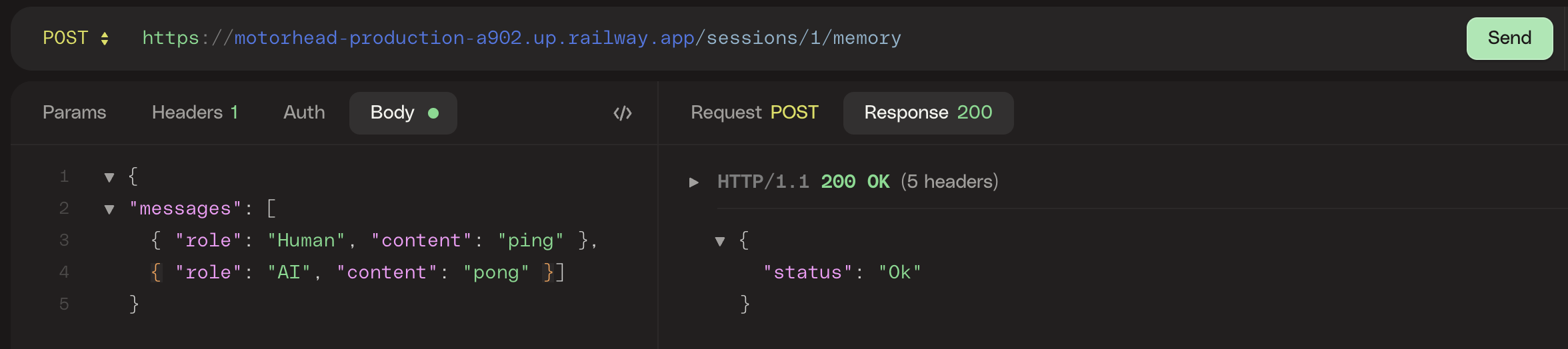

Once the deployment completes, the Motörhead server will be available at a default xxx.up.railway.app domain. If you are interested in setting up a custom domain, I covered it at length in a previous post - see the final section here. To test that the Motörhead server has been deployed properly, make a simple POST request to the sessions/[SESSION_ID]/memory endpoint using HTTPie. The session is automatically created if it did not previously exist.

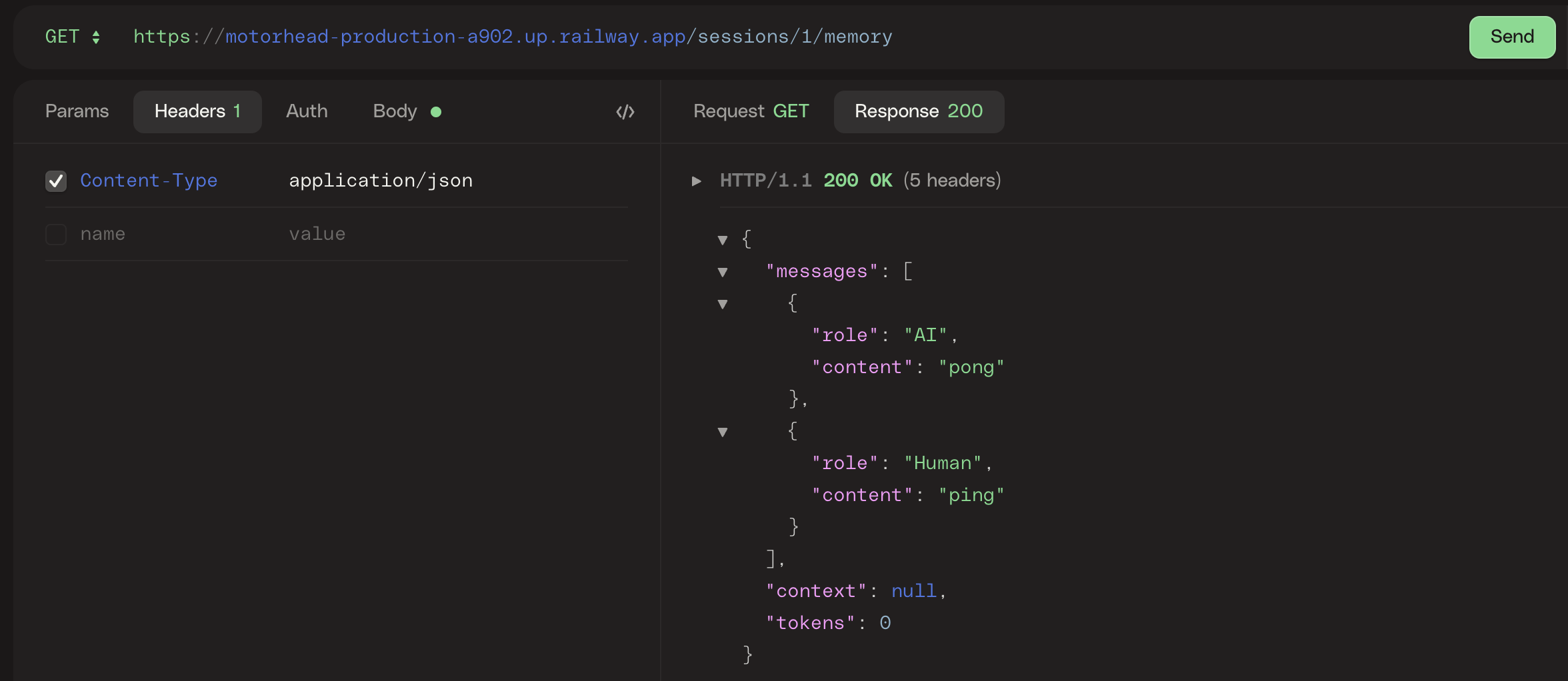

If the POST request is successful, make a GET request to the same endpoint and session to verify that the data was stored properly.
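If you'd rather script this check than run it by hand, here is a minimal Python sketch of the same round trip using only the standard library. It assumes the POST body is a "messages" list of role/content pairs, and that the GET response carries the stored messages under "messages", possibly nested inside a "data" object (which is how I understand the managed version to respond). The function names are my own, not part of any Motörhead client.

```python
import json
from urllib.request import Request, urlopen


def stored_messages(payload):
    """Pull the message list out of a GET .../memory response body."""
    # Assumption: some deployments wrap the body in {"data": {...}}.
    body = payload.get("data", payload)
    return body.get("messages", [])


def round_trip(base_url, session_id, messages):
    """POST messages to a session, GET them back, and report whether they match."""
    endpoint = f"{base_url.rstrip('/')}/sessions/{session_id}/memory"
    post = Request(
        endpoint,
        data=json.dumps({"messages": messages}).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urlopen(post, timeout=10):
        pass  # urlopen raises on HTTP error statuses
    with urlopen(endpoint, timeout=10) as response:
        found = stored_messages(json.load(response))
    sent = {(m["role"], m["content"]) for m in messages}
    got = {(m.get("role"), m.get("content")) for m in found}
    return sent <= got
```

For example, round_trip("https://xxx.up.railway.app", "test-1", [{"role": "Human", "content": "ping"}]) should return True against a healthy deployment, with your own domain substituted in.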

LLM Memory Management with Motörhead

To test LLM memory management (that is, storing and retrieving user and AI chat messages), we'll use the LangChain MotorheadMemory class, along with a PromptTemplate and an LLMChain. Here's an excerpt from a Jupyter notebook that I created to play with Motörhead - you can find this (and other memory management examples) in my GitHub repo for the LangChain Decoded series. Note that this is just proof-of-concept code; it has not been developed or optimized for production usage.

# Memory management using Motorhead (managed)
import os

from langchain import OpenAI, LLMChain, PromptTemplate
from langchain.memory.motorhead_memory import MotorheadMemory

# Assumption: the OpenAI key is exported as the OPENAI_API_KEY environment variable
openai_api_key = os.environ["OPENAI_API_KEY"]

template = """You are a chatbot having a conversation with a human.

{chat_history}
Human: {human_input}
AI:"""

prompt = PromptTemplate(input_variables=["chat_history", "human_input"], template=template)

# Replace API_KEY and CLIENT_ID with the values from the Metal dashboard
memory = MotorheadMemory(
    api_key="API_KEY",
    client_id="CLIENT_ID",
    session_id="langchain-1",
    memory_key="chat_history",
)

# Load any existing history for this session (top-level await works in a Jupyter notebook)
await memory.init()

llm = OpenAI(temperature=0, openai_api_key=openai_api_key)
llm_chain = LLMChain(llm=llm, prompt=prompt, memory=memory)

llm_chain.run("Hello, I'm Motorhead.")

*** Response ***

Hi Motorhead, it's nice to meet you. Is there something specific you want to talk about?

Use the chain's run() method to advance the conversation in the notebook.

llm_chain.run("What's my name?")

*** Response ***

Your name is Motorhead. Is there anything else I can help you with?

To test the self-hosted option, just replace the api_key and client_id parameters with url (the Railway instance) when instantiating the class; excerpt below.

memory = MotorheadMemory(
    url="https://motorhead.up.railway.app",
    session_id="langchain-1",
    memory_key="chat_history",
)
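To see why the second question gets the right answer, it helps to picture what the chain sends to the model on each turn. The sketch below is a simplified stand-in for what the memory class and prompt template do together, not LangChain's actual implementation: it renders stored messages into a chat_history string and fills the template. The render_history and build_prompt helpers are hypothetical names of my own.

```python
TEMPLATE = """You are a chatbot having a conversation with a human.

{chat_history}
Human: {human_input}
AI:"""


def render_history(messages):
    """Flatten role/content pairs into 'Role: text' lines, oldest first."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


def build_prompt(messages, human_input):
    """Fill the template with the stored history and the new user input."""
    return TEMPLATE.format(
        chat_history=render_history(messages),
        human_input=human_input,
    )


# The first exchange, as it might be stored under the session.
history = [
    {"role": "Human", "content": "Hello, I'm Motorhead."},
    {"role": "AI", "content": "Hi Motorhead, it's nice to meet you."},
]

prompt_text = build_prompt(history, "What's my name?")
print(prompt_text)
```

Because the earlier exchange is part of the prompt, the model can answer the follow-up question even though it holds no state of its own between calls.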