Persistent AI Chat Bots with LangChain and Steamship

A brief guide to deploying persistent-memory AI chatbots with LangChain and Steamship.

In my previous post, I covered a simple deployment of an AI chat bot on Vercel. It was fairly basic though; in this tutorial, I'll cover an approach wherein the chatbot remembers the context from previous questions and maintains a persistent chat history for each user across sessions. To achieve this outcome, we'll use LangChain and Steamship.

What is LangChain?

LangChain is an open-source library for building applications on top of large language models (LLMs). Originally written in Python, LangChain now has a JavaScript implementation too. It can be used for chatbots, text summarisation, data generation, question answering, and more. Broadly, it supports the following modules:

- Prompts: management of the text that LLMs take as input.

- LLMs: API wrapper around the underlying LLMs.

- Document loaders: interface for loading documents and other data sources.

- Utils: utilities for computation or interaction with other sources like embeddings, search engines etc.

- Chains: sequence of calls to LLMs and utils; the true value of LangChain.

- Indexes: best practices for combining your own data with LLMs.

- Agents: use LLMs to decide which actions to take and in which order.

- Memory: state persistence between calls of an agent or chain.
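To make the Prompts, LLMs, Chains and Memory modules a little more concrete, here is a minimal, library-free sketch of how they fit together. It deliberately does not use LangChain's actual API: `ConversationMemory`, `fake_llm` and `run_chain` are hypothetical names I made up for illustration, and the "LLM" is a stub that only reports how many earlier turns it found in the prompt.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Prompt template: earlier turns are prepended to the new question.
PROMPT_TEMPLATE = "{history}Human: {question}\nAI:"


@dataclass
class ConversationMemory:
    """Hypothetical stand-in for a memory module: keeps (question, answer) turns."""

    turns: List[Tuple[str, str]] = field(default_factory=list)

    def render(self) -> str:
        # Flatten the stored turns into text that can be placed in a prompt.
        return "".join(f"Human: {q}\nAI: {a}\n" for q, a in self.turns)

    def save(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))


def fake_llm(prompt: str) -> str:
    """Stub LLM (an assumption, not a real model): counts earlier turns in the prompt."""
    prior_turns = prompt.count("Human:") - 1
    return f"(stub reply, {prior_turns} earlier turn(s) in context)"


def run_chain(llm: Callable[[str], str], memory: ConversationMemory, question: str) -> str:
    """One 'chain' step: build the prompt, call the LLM, store the new turn."""
    prompt = PROMPT_TEMPLATE.format(history=memory.render(), question=question)
    answer = llm(prompt)
    memory.save(question, answer)
    return answer


memory = ConversationMemory()
first = run_chain(fake_llm, memory, "What is LangChain?")
second = run_chain(fake_llm, memory, "Does it support memory?")
print(first)
print(second)
```

The second call sees the first turn in its prompt, which is all that "memory" means at this level. In this sketch the history lives only in the running process, so it is lost on restart; the hosted setup in this post is what keeps it across sessions.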

What is Steamship?

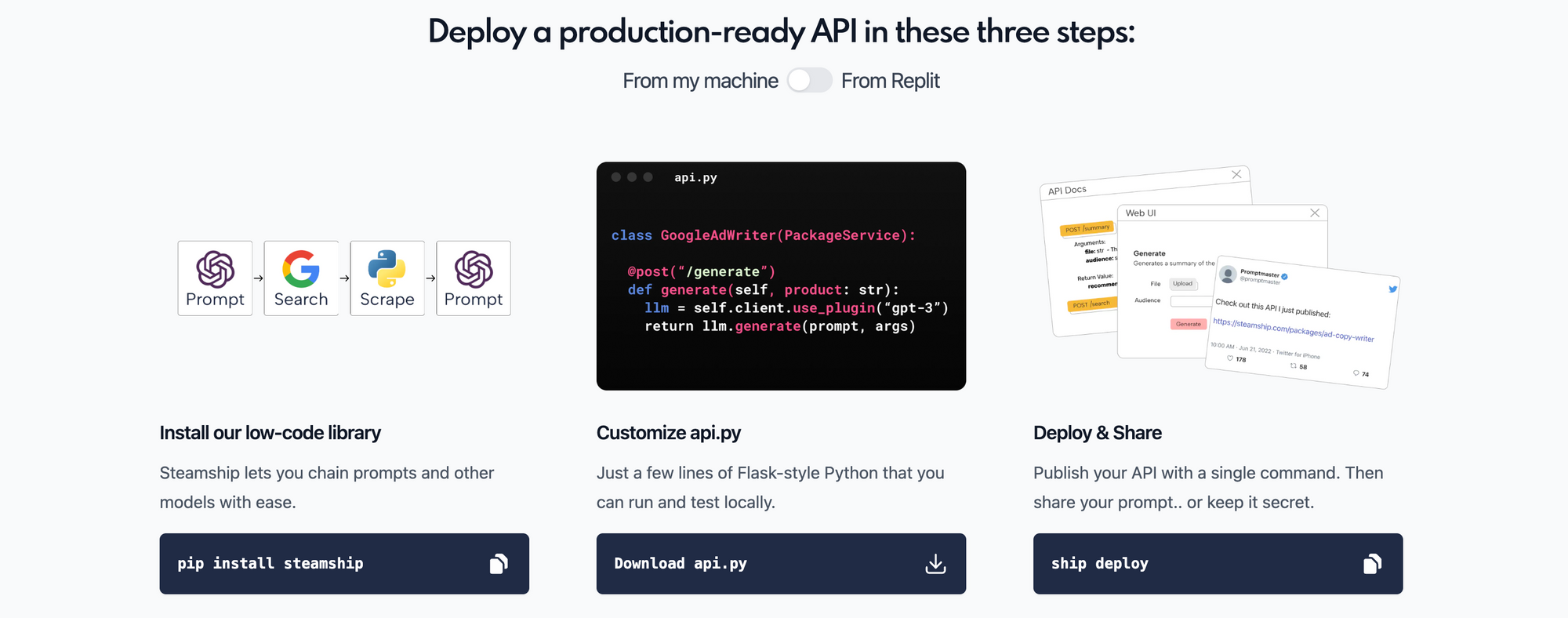

Steamship is a managed backend for hosting AI services, allowing you to turn clever prompts into instant API endpoints. It integrates with major model providers like OpenAI, Hugging Face, Co:here and more, and allows you to create scalable interfaces without actually managing the infrastructure. Steamship's LangChain wrappers add managed cloud hosting to the underlying capabilities, making it really easy to publish LangChain endpoints. Steamship has yet to release their pricing, but their introductory offer of 500 API calls and 200K characters / 40 MB of LLM usage should be sufficient for our walk-through today.

Head over to Steamship and create an account. Once you log in, navigate to Account > API Key, and note down the API key - we'll use it in the next section to deploy their AI chatbot template on Vercel.

Create an App Package using Steamship API

Steamship has just released a few ready-to-use templates for AI-powered demos. One such template is the AI Chat GPT-3 demo, which extends the original starter template from Vercel, and adds persistent memory support. Feel free to use your dev machine here - I'll use a disposable DigitalOcean droplet for this walk-through instead. Run the following commands to deploy the Steamship stack.

# Update the package index and the installed packages

apt update && apt dist-upgrade && apt autoremove

# Confirm that python3 is installed

python3 --version

# Install pip, the standard package manager for Python

apt install python3-pip

# Install nodejs and npm, the standard package manager for nodejs

apt install npm

# Upgrade node to the latest release

npm install -g n

n latest

# Create an example Next.js app (specify package name: ai-chatgpt)

npx create-next-app --example https://github.com/steamship-core/vercel-examples/tree/main/ai-chatgpt

# Install Steamship and LangChain packages

cd ai-chatgpt

pip install steamship steamship-langchain

Next, add the Steamship API key to the configuration file.

# Create the ~/.steamship.json file, or update it if it exists

nano ~/.steamship.json

# Add the Steamship API key as follows, and save the file

{

"apiKey": "<STEAMSHIP_API_KEY>"

}

Test your Steamship credentials using the login command; since we are running this command on a headless server, it will use the API key instead of initiating a browser-based authentication. If you are following along on your dev machine, the latter should suffice.
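If the login fails, a malformed config file is a likely culprit. Here is a small sketch that checks the file before you retry. It assumes only what is shown above (a JSON object with an `apiKey` field in `~/.steamship.json`); `load_api_key` is a hypothetical helper of mine, not part of the Steamship package, and it never contacts Steamship, so it cannot tell you whether the key itself is valid.

```python
import json
from pathlib import Path


def load_api_key(config_path: Path) -> str:
    """Return the apiKey from a JSON config file, or raise ValueError with a reason."""
    try:
        config = json.loads(config_path.read_text(encoding="utf-8"))
    except OSError as exc:
        raise ValueError(f"cannot read {config_path}: {exc}") from exc
    except json.JSONDecodeError as exc:
        raise ValueError(f"{config_path} is not valid JSON: {exc}") from exc

    api_key = config.get("apiKey") if isinstance(config, dict) else None
    if not isinstance(api_key, str) or not api_key.strip():
        raise ValueError("the apiKey field is missing or empty")
    if api_key.startswith("<"):
        # Catches the <STEAMSHIP_API_KEY> placeholder being left in place.
        raise ValueError("the apiKey field still contains the placeholder")
    return api_key


if __name__ == "__main__":
    try:
        load_api_key(Path.home() / ".steamship.json")
        print("Config file looks well-formed.")
    except ValueError as err:
        print(f"Config problem: {err}")
```

The script prints only a status message and never the key itself, so it is safe to run in a shared terminal.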

# Login to Steamship and deploy the app from the project's root folder

cd steamship

ship login

ship deploy

# You'll be prompted for a couple of inputs

What handle would you like to use for your package? Valid characters are a-z and -: <specify package name here, and press Enter>

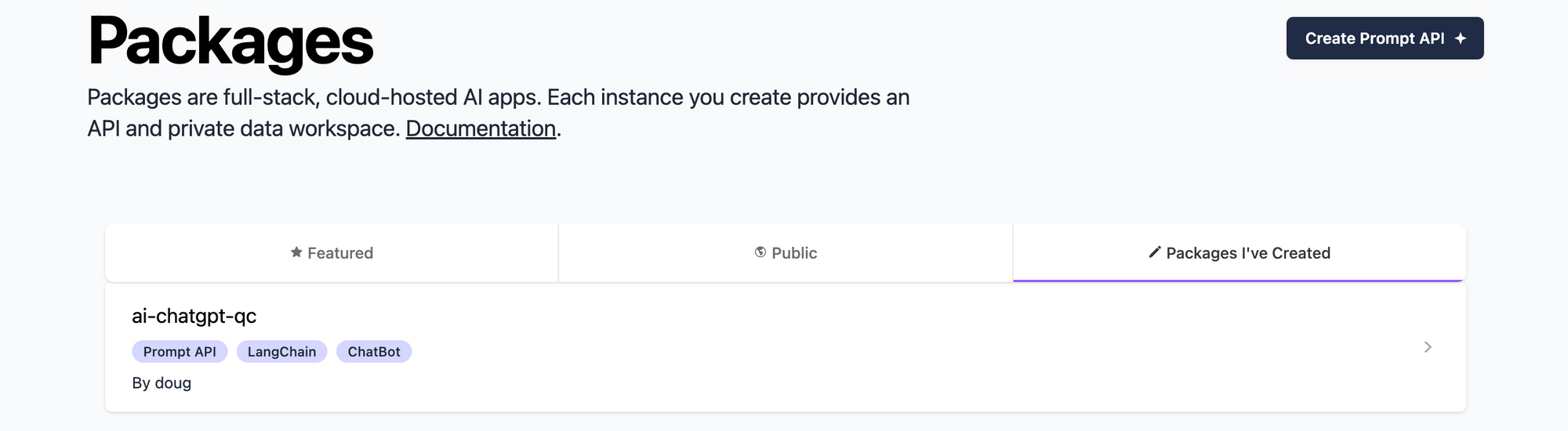

What should the new version be? Valid characters are a-z, 0-9, . and - [1.0.0]: <accept default or change, and press Enter>

The package should instantly get created and be available on Steamship under the Packages I've Created tab.
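The two prompts spell out which characters they accept, so you can check a candidate handle or version before running the deploy. This sketch encodes only the rules quoted in the prompts above; `is_valid_handle` and `is_valid_version` are hypothetical helpers, and Steamship may well enforce additional constraints (length, uniqueness) that are not captured here.

```python
import re

# Character sets taken from the deploy prompts quoted above.
HANDLE_RE = re.compile(r"[a-z-]+")        # "Valid characters are a-z and -"
VERSION_RE = re.compile(r"[a-z0-9.-]+")   # "Valid characters are a-z, 0-9, . and -"


def is_valid_handle(handle: str) -> bool:
    """True if the handle is non-empty and uses only a-z and '-'."""
    return HANDLE_RE.fullmatch(handle) is not None


def is_valid_version(version: str) -> bool:
    """True if the version is non-empty and uses only a-z, 0-9, '.' and '-'."""
    return VERSION_RE.fullmatch(version) is not None


for candidate in ("ai-chatgpt", "AI_ChatGPT", "ai-chatgpt-2"):
    print(candidate, is_valid_handle(candidate))

for candidate in ("1.0.0", "1.0.0-beta", "1.0.0 beta"):
    print(candidate, is_valid_version(candidate))
```

Note that, going by the prompt text, digits are not listed for handles, which is why `ai-chatgpt-2` is rejected in this sketch.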

Deploy AI Chatbot using Steamship Template on Vercel

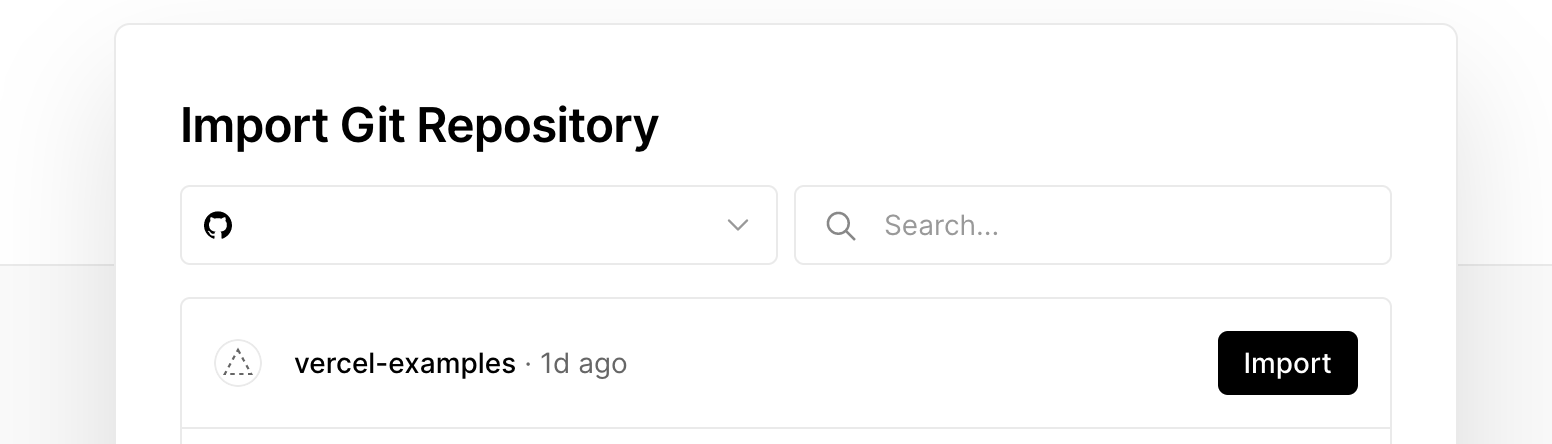

Log in to your GitHub account, and fork the AI Chat GPT-3 repository. If you don't already have a Vercel account, create one, and connect it to your GitHub account. Click Add New > Project, and import the vercel-examples repository that you just forked.

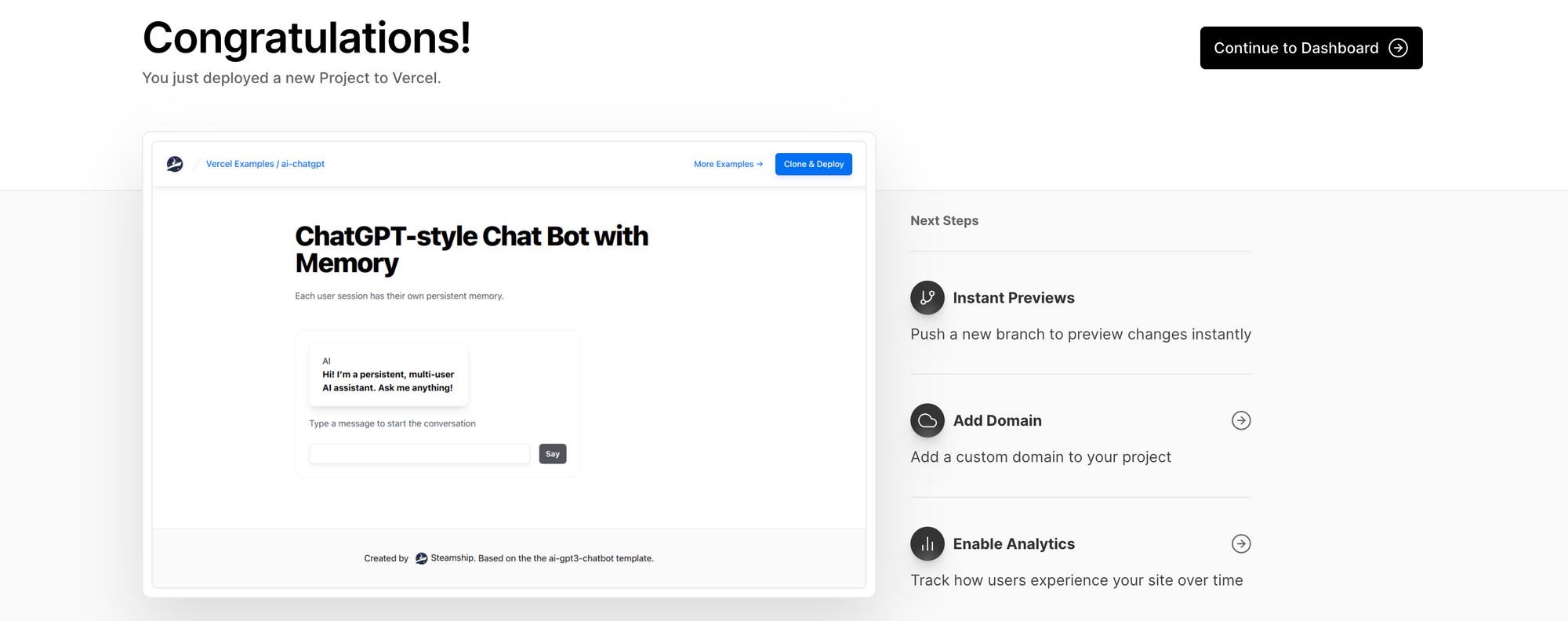

Specify the Project Name, change the Root Directory to the ai-chatgpt folder, add the following environment variables, and click Deploy.

STEAMSHIP_API_KEY: your Steamship API key

STEAMSHIP_PACKAGE_HANDLE: the package name you specified while deploying the Steamship stack above

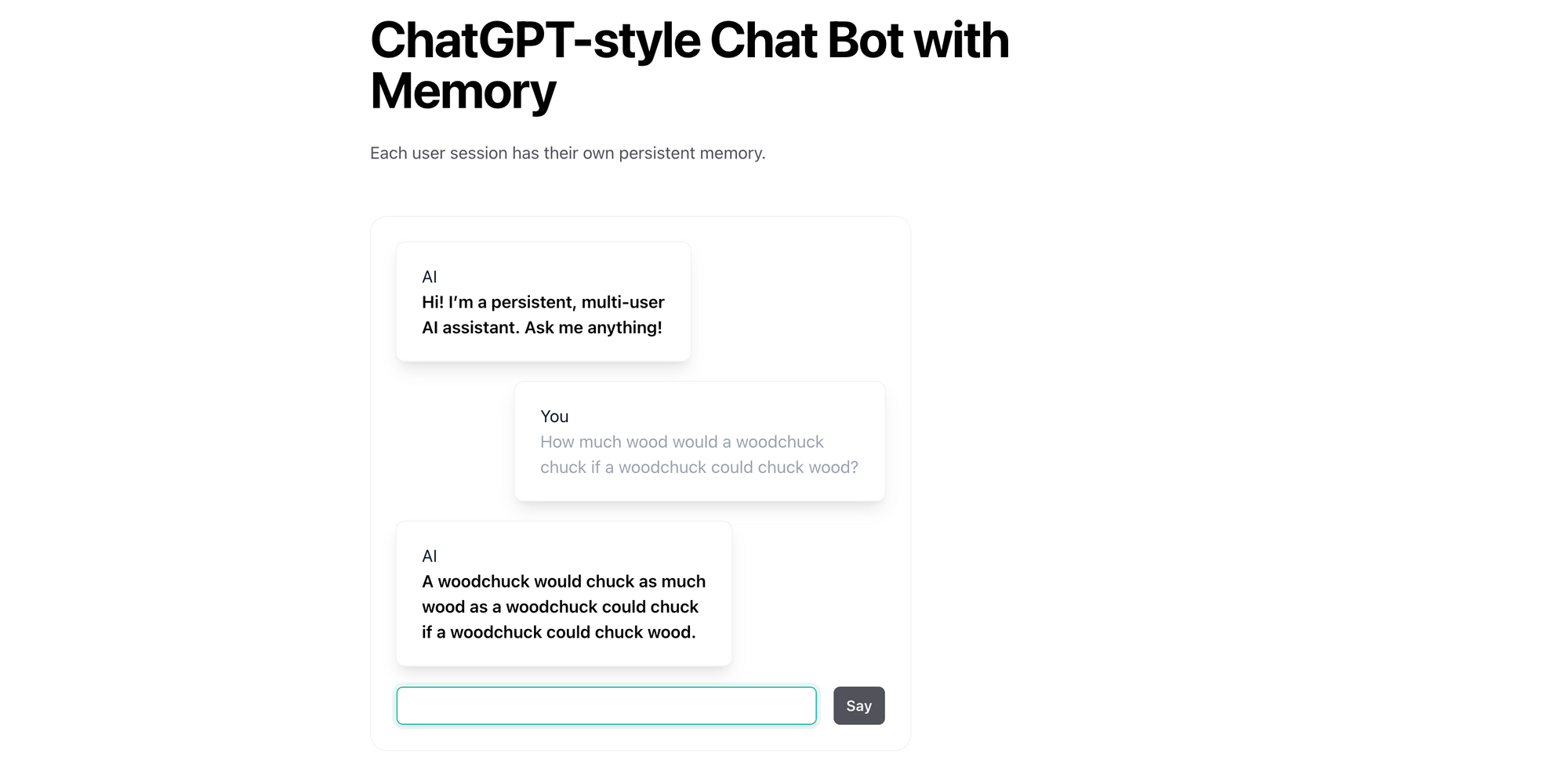

The Vercel deployment starts instantly, builds the image, runs a few checks, and makes the chatbot available publicly on a temporary domain. If you want to host the chatbot on a custom domain, see this guide.

Click on the deployment URL to launch your chatbot. You can converse normally, as you would with ChatGPT, and the chatbot remembers the context from previous questions and maintains a persistent chat history for each user across sessions. Since this chatbot uses Steamship for orchestration instead of making direct API calls to OpenAI, you don't pay OpenAI directly; usage counts against your Steamship allowance instead. It remains to be seen whether there will be a market for such AI orchestration middleware in the future, or whether it gets subsumed by the model providers or other layers of an AI application stack. For now though, with each iteration, my interest gets piqued further, and I continue to explore the generative AI ecosystem.