Open-Source LLM Observability and Analytics with Langfuse

A brief on observability and analytics needs for large language models (LLMs), and how to address them using Langfuse.

As demand for large language models (LLMs) grows rapidly, so does the need for observability and analytics. Understanding application traces in production environments, and getting a granular view of the quality, cost and latency of model usage, are increasingly important for the success and longevity of generative artificial intelligence (AI) applications. In a previous post, we discussed Helicone, an open-source LLM observability platform. Today, we'll explore Langfuse, another project attempting to conquer this nascent space.

What is Langfuse?

Langfuse is an open-source observability and analytics platform for LLM applications. It helps you trace and debug LLM applications, and understand how changes to any step impact overall performance. In preview, Langfuse also provides prebuilt analytics to track token usage and costs, collect user feedback, and monitor and improve latency at each step of the application. Langfuse integrates with applications through an API and SDKs (for Python and JS/TS), and also integrates with LangChain, the popular LLM development framework. Langfuse can be deployed locally, self-hosted on popular platforms like DigitalOcean or Railway, or consumed through the Langfuse Cloud offering.

Deploy Langfuse using One-Click Starter on Railway

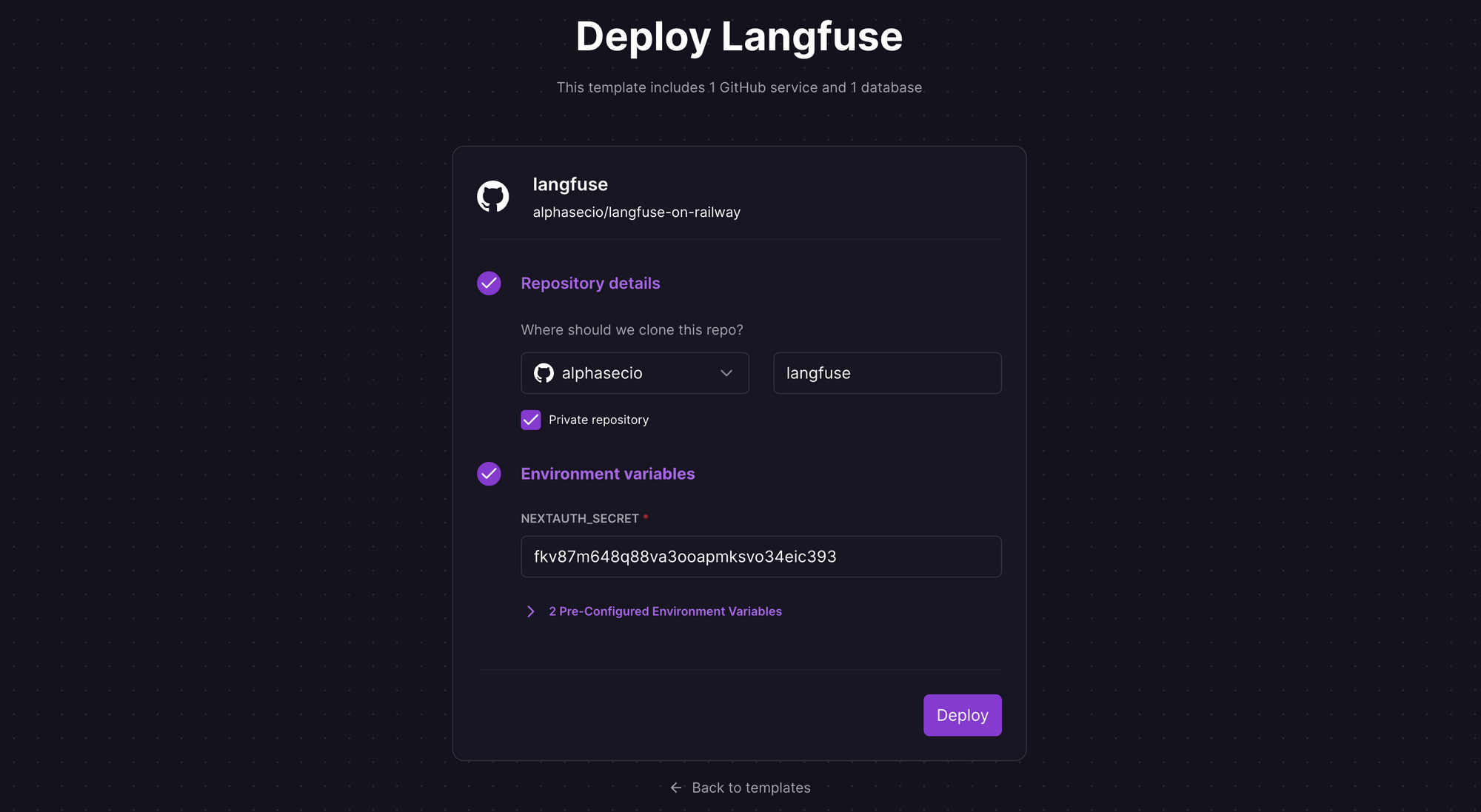

In this post, we're going to self-host the Langfuse server using Docker on Railway, a modern app hosting platform that makes it easy to deploy production-ready apps quickly. If you don't already have an account, sign up using GitHub, and click Authorize Railway App when redirected. Review and agree to Railway's Terms of Service and Fair Use Policy if prompted. Railway no longer offers an always-free plan, but the free trial is enough to follow along. Launch the Langfuse one-click starter template to deploy the server on Railway.

You'll be given an opportunity to change the default repository name and make it private, if you'd like. Provide values for the NEXTAUTH_SECRET, SALT, and NEXTAUTH_URL environment variables, and click Deploy; the deployment will kick off immediately.
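NEXTAUTH_SECRET and SALT should be long random strings, while NEXTAUTH_URL is a URL rather than a secret. A minimal sketch for generating the two random values in Python; the 32-byte length and the base64 encoding are my own choices for illustration, not requirements stated by the template, and `random_secret` is a hypothetical helper name:

```python
import base64
import secrets


def random_secret(num_bytes: int = 32) -> str:
    """Return num_bytes of cryptographically strong randomness, base64-encoded."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


# Generate one independent value per variable and paste each into the Railway form.
nextauth_secret = random_secret()
salt = random_secret()
print("NEXTAUTH_SECRET =", nextauth_secret)
print("SALT            =", salt)
```

Use a different value for each variable, and keep both out of version control.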

Once the deployment completes, the Langfuse server will be available at a default xxx.up.railway.app domain - launch this URL to access the Langfuse UI. If you are interested in setting up a custom domain, I covered it at length in a previous post - see the final section here.
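Before opening the UI, you can check from a script that the server is up. The sketch below assumes the deployment exposes a health endpoint at `/api/public/health`; that path is an assumption that may not hold for every Langfuse version, and `health_url` and `is_healthy` are hypothetical helper names:

```python
import urllib.request


def health_url(base_url: str) -> str:
    """Build the health-check URL for a Langfuse deployment (the path is an assumption)."""
    return base_url.rstrip("/") + "/api/public/health"


def is_healthy(base_url: str, timeout: float = 10.0) -> bool:
    """Return True if the server answers the health check with HTTP 200."""
    try:
        with urllib.request.urlopen(health_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError and HTTPError are both subclasses of OSError.
        return False
```

Call `is_healthy(...)` with your own Railway domain in place of the xxx.up.railway.app placeholder; a `False` result right after deploying may only mean the container is still starting.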

Getting Started with Langfuse

To get started, click the Sign up button, provide the Name, Email and Password, and click Sign up to create an administrative account. On the Get started page, click + New project, provide the Project name, and click Create to create a new project.

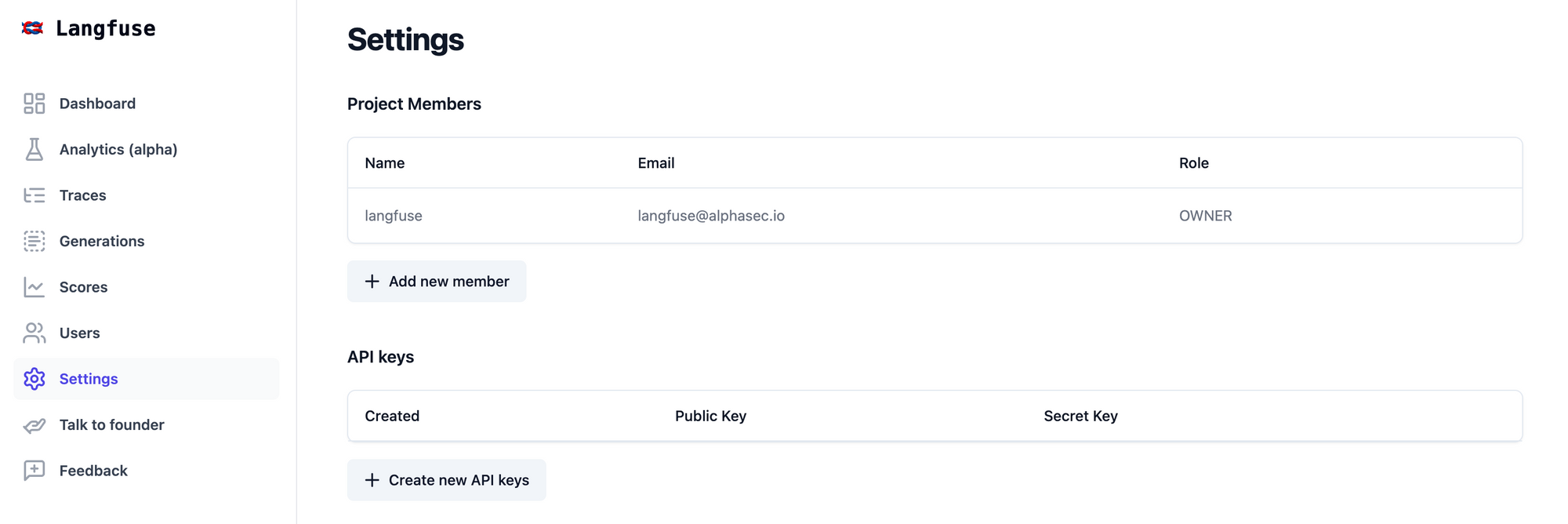

On the Settings page, click Create new API keys to generate a new secret and public key pair. Store these keys securely - you'll need them in your application.
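One way to keep the keys out of source code is to read them from environment variables at startup. This is a rough sketch, not part of the Langfuse SDK: the variable names `LANGFUSE_PUBLIC_KEY` and `LANGFUSE_SECRET_KEY` and the helper `load_langfuse_keys` are chosen for illustration, and the prefix check assumes keys look like the `pk-lf-...` and `sk-lf-...` placeholders used in the notebook later in this post.

```python
import os


def load_langfuse_keys(env=os.environ):
    """Read the Langfuse key pair from environment variables and sanity-check it."""
    public_key = env.get("LANGFUSE_PUBLIC_KEY", "")
    secret_key = env.get("LANGFUSE_SECRET_KEY", "")
    # Catch the common mistake of swapping the two keys or leaving one unset.
    if not public_key.startswith("pk-lf-"):
        raise ValueError("LANGFUSE_PUBLIC_KEY is missing or does not start with 'pk-lf-'")
    if not secret_key.startswith("sk-lf-"):
        raise ValueError("LANGFUSE_SECRET_KEY is missing or does not start with 'sk-lf-'")
    return public_key, secret_key
```

The returned pair can then be passed to whatever client you use, instead of pasting the literal keys into a shared notebook.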

Feel free to explore other parts of the web interface like the dashboard, traces, generations, scores, and so on. Most will be empty now, but we'll revisit them later.

Create a Langfuse Notebook with Google Colab

Google Colaboratory (Colab for short) is a cloud-based platform for data analysis and machine learning. It provides a free Jupyter notebook environment that lets users write and execute Python code in their web browser, with no setup or configuration required. Create your own notebook using the code below, and click the play button next to each cell to execute the code.

# Install dependencies

%pip install langchain openai langfuse

# Configure required environment variables

ENV_HOST = "https://langfuse-production.up.railway.app"

ENV_SECRET_KEY = "sk-lf-..."

ENV_PUBLIC_KEY = "pk-lf-..."

OPENAI_API_KEY = "sk-..."

# Integrate Langfuse with LangChain Callbacks

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

from langchain.chains import LLMChain

from langfuse.callback import CallbackHandler

template = """You are a helpful assistant, who is good at general knowledge trivia. Answer the following question succinctly.

Question: {question}

Answer:"""

prompt = PromptTemplate(template=template, input_variables=['question'])

question = "Why is the sky blue?"

llm = OpenAI(openai_api_key=OPENAI_API_KEY)

chain = LLMChain(llm=llm, prompt=prompt)

handler = CallbackHandler(ENV_PUBLIC_KEY, ENV_SECRET_KEY, ENV_HOST)

chain.run(question, callbacks=[handler])

handler.langfuse.flush()

Model Usage Metrics & Analytics with Langfuse

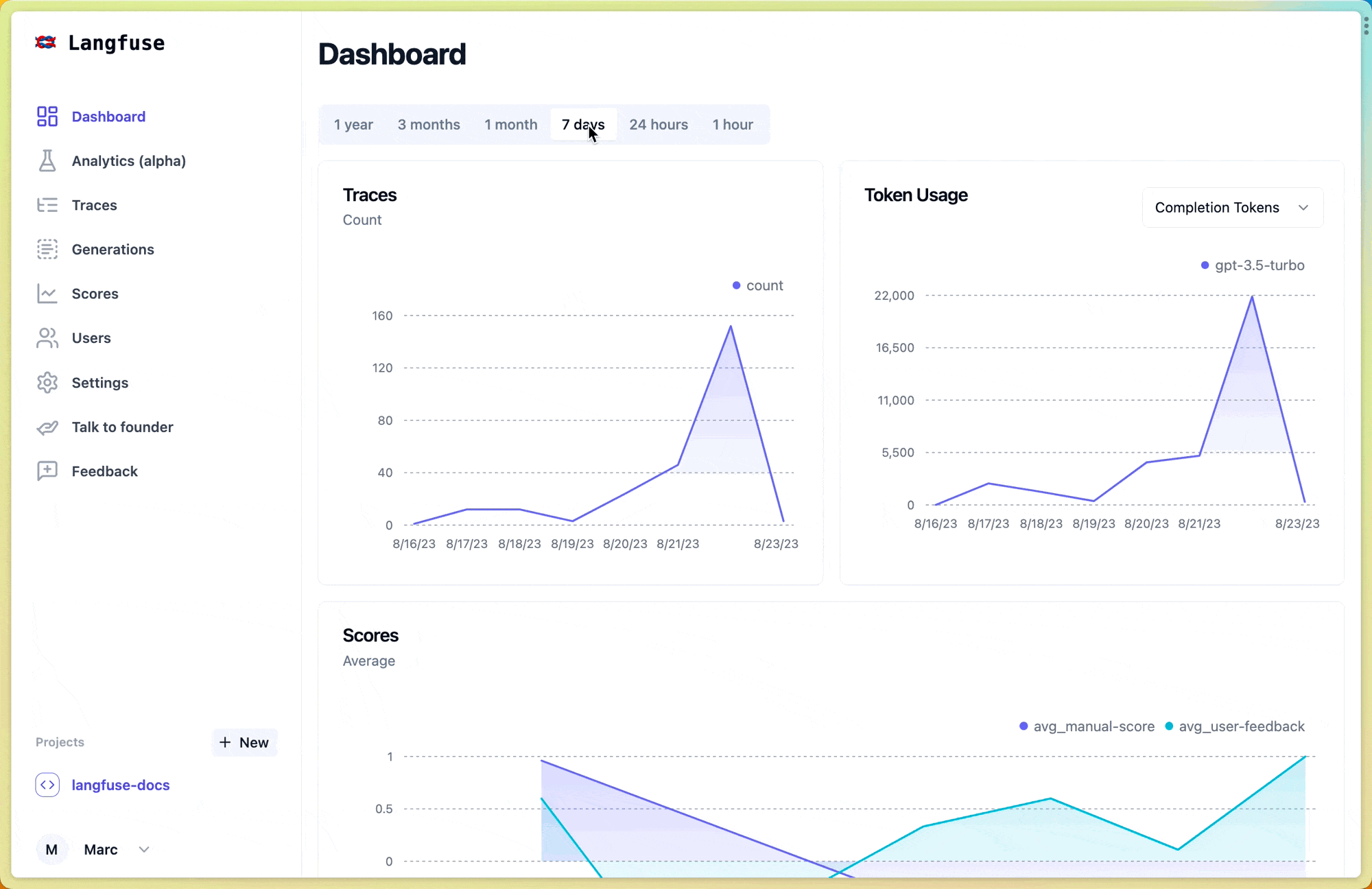

Once you run the notebook in Google Colab, go back to the Langfuse dashboard. Instantly, you'll see it populated with an entry reflecting our notebook execution.

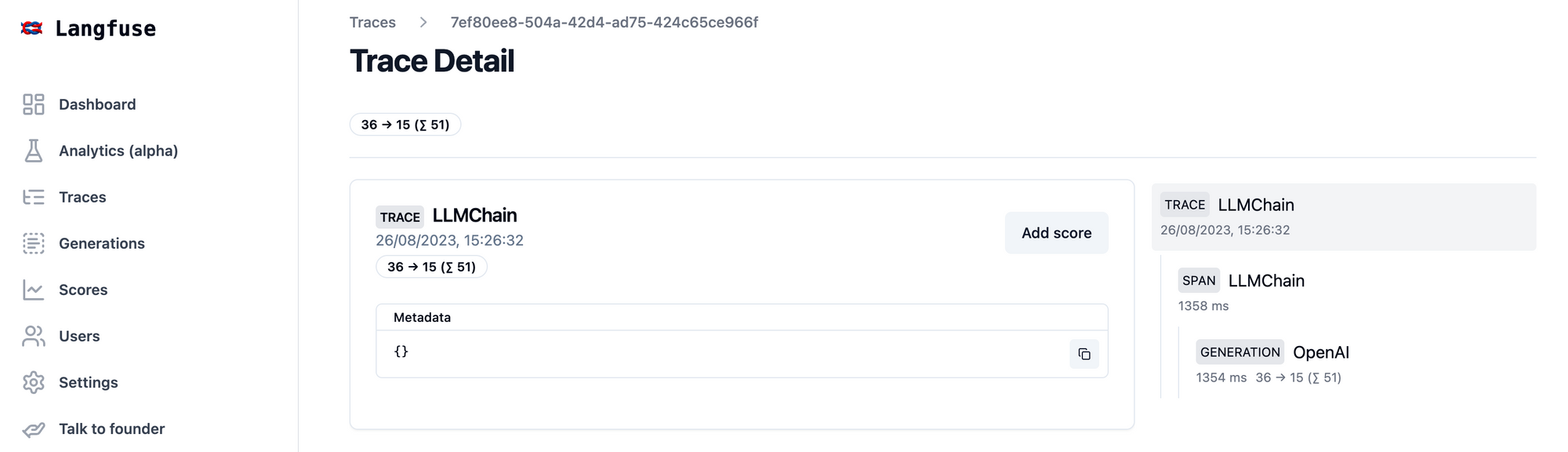

Click on Traces to see the execution trace in detail. This was a simple example, hence you only see a couple of steps on the right - click on each to explore. You can add scores to evaluate individual executions or traces. The scores assess aspects such as quality, tonality, accuracy, completeness, and relevance.
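To make the idea of scores concrete, here is a simplified model of scores attached to traces, with a per-name average. It is a sketch for intuition only and does not use the Langfuse SDK: the `Score` class, its fields, and the `average_scores` helper are all hypothetical, as are the trace IDs and values.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Score:
    """A single evaluation attached to a trace, e.g. quality or relevance."""
    trace_id: str
    name: str
    value: float


def average_scores(scores):
    """Group scores by name and return the mean value for each name."""
    by_name = {}
    for score in scores:
        by_name.setdefault(score.name, []).append(score.value)
    return {name: mean(values) for name, values in by_name.items()}


# Made-up example data: two traces scored on quality, one on relevance.
scores = [
    Score("trace-1", "quality", 1.0),
    Score("trace-2", "quality", 0.0),
    Score("trace-1", "relevance", 0.5),
]
print(average_scores(scores))
```

Tracking such averages over time is one way to see whether a prompt or model change actually improved the aspect you care about.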

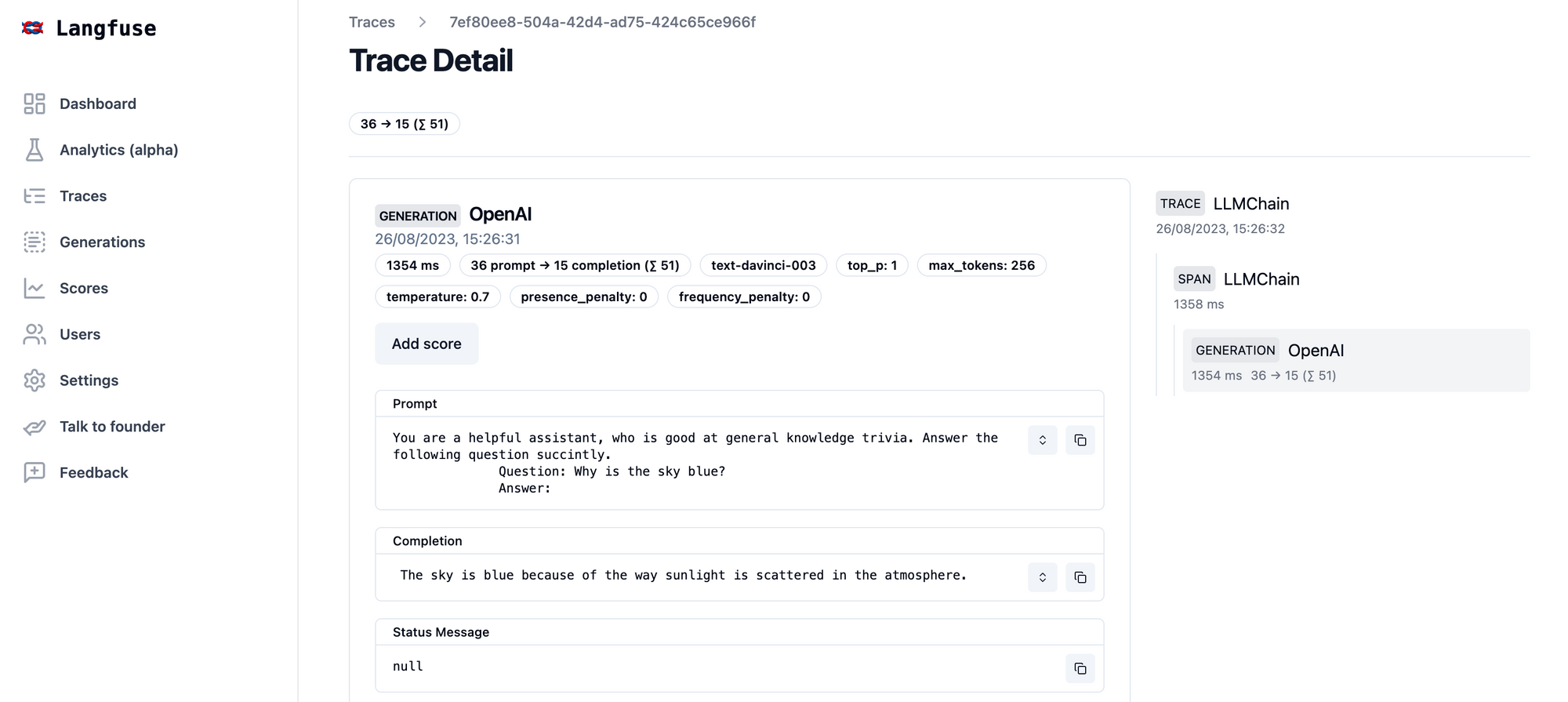

Now click on the Generation step in the previous figure, or click on the Generations tab, to explore the actual model usage. For ingested generations without usage attributes, Langfuse automatically calculates the token counts.
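Token counts translate into cost once you multiply them by a per-token price. The arithmetic can be sketched as follows; the prices below are hypothetical placeholders rather than any provider's real rates, and `estimate_cost` is an illustrative helper, not a Langfuse function.

```python
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens; replace with your provider's current rates.
PRICE_PER_1K = {
    "prompt": Decimal("0.0015"),
    "completion": Decimal("0.002"),
}


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the cost of one generation from its prompt and completion token counts."""
    prompt_cost = PRICE_PER_1K["prompt"] * prompt_tokens / 1000
    completion_cost = PRICE_PER_1K["completion"] * completion_tokens / 1000
    return prompt_cost + completion_cost


# Example: a short question (40 prompt tokens) with a 60-token answer.
print(estimate_cost(40, 60))
```

`Decimal` is used instead of floats so that very small per-request amounts add up without rounding drift when summed across many generations.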

Langfuse Analytics is currently in alpha, and requires early access from the team. Overall, Langfuse is still a fairly nascent project but one with promise in the (slowly getting crowded) LLM observability space.