Open-Source ChatGPT UI Alternative with Open WebUI

A brief guide to deploying Open WebUI, an open-source ChatGPT UI alternative, on Railway.

The success of ChatGPT has spawned a multitude of copycat projects. Some look to ride the large language model (LLM) hype without being sufficiently differentiated, but a handful genuinely extend the core capabilities with innovative, user-friendly features. In a previous post, I covered Chatbot UI, but there's also ChatGPT Next Web and a bunch of other open-source alternatives. Today, we'll explore Open WebUI and walk through self-hosting it on Railway.

What is Open WebUI?

Open WebUI (formerly Ollama WebUI) is a user-friendly, self-hosted web interface designed to operate entirely offline. It supports LLM runners such as Ollama as well as OpenAI-compatible APIs. Since its launch, it has rapidly added a number of exciting features:

- Responsive web interface, works well on both desktop and mobile.

- Easy setup using Docker images, with a variety of themes available.

- Syntax highlighting for improved code readability.

- Retrieval Augmented Generation (RAG) support for document integration.

- Web browsing capability, prompt presets and conversation tagging.

- Multi-model and multimodal support, plus voice input.

- Chat management including sharing, collaboration, history and export.

- Many other features (see the full list here).

Deploy Open WebUI Using the One-Click Starter on Railway

In this post, we'll self-host Open WebUI on Railway, a modern app hosting platform that makes it easy to deploy production-ready apps quickly. If you don't already have an account, sign up using GitHub and click Authorize Railway App when redirected. Review and agree to Railway's Terms of Service and Fair Use Policy if prompted. Railway no longer offers an always-free plan, but the free trial is enough to try this out. Launch the Open WebUI one-click starter template (or click the button below) to deploy it instantly on Railway.

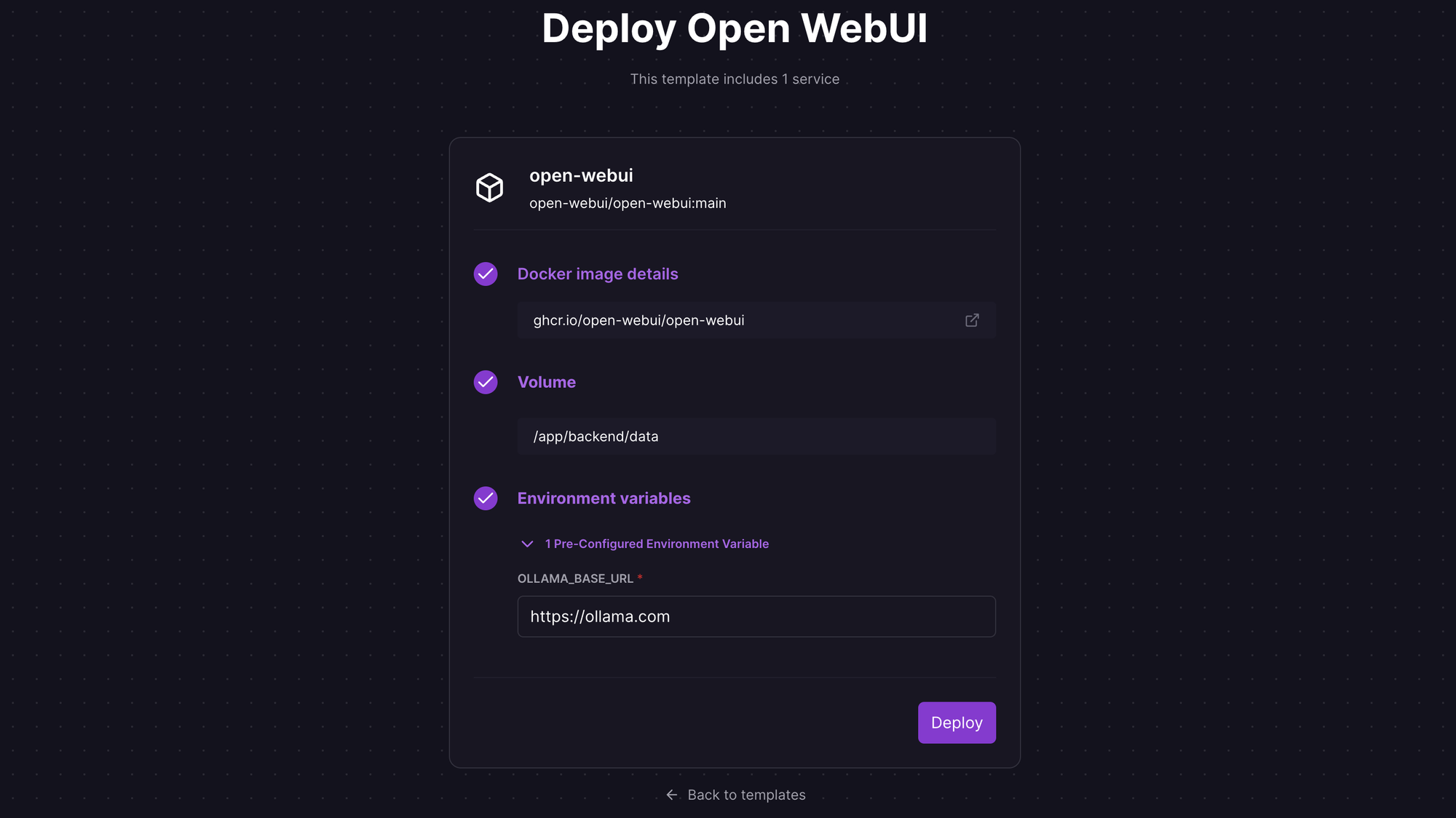

The template uses the open-webui:main image. Review the default settings and click Deploy; the deployment kicks off immediately. The OLLAMA_BASE_URL variable holds a placeholder value: if you plan to use Ollama, replace it with the actual URL of your Ollama instance. If you only plan to use OpenAI, you can either add an OPENAI_API_KEY variable or enter the API key in the web interface after deployment.
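Before clicking Deploy, it can be worth sanity-checking these variables. The Python sketch below is a hypothetical helper, not part of Open WebUI or Railway: it takes the service variables as a plain dict and flags the situations described above. Since the template's exact placeholder text isn't stated here, it only checks that OLLAMA_BASE_URL looks like an http(s) URL, and it treats a localhost URL as a likely mistake (a heuristic assumption: inside a container, localhost refers to the container itself).

```python
from urllib.parse import urlparse


def check_openwebui_env(env: dict) -> list[str]:
    """Return warnings for a hypothetical dict of Railway service variables.

    Only the two variables discussed in this post are inspected.
    """
    warnings: list[str] = []
    ollama_url = env.get("OLLAMA_BASE_URL", "").strip()
    openai_key = env.get("OPENAI_API_KEY", "").strip()

    if ollama_url:
        parsed = urlparse(ollama_url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            # Catches leftover placeholders such as "<your-ollama-url>" (assumed format).
            warnings.append(f"OLLAMA_BASE_URL does not look like a URL: {ollama_url!r}")
        elif parsed.hostname in ("localhost", "127.0.0.1"):
            # Heuristic: inside a container, localhost is the container itself.
            warnings.append("OLLAMA_BASE_URL points at localhost, i.e. the Open WebUI container")

    if not ollama_url and not openai_key:
        # Not fatal: the OpenAI key can still be entered in the web interface later.
        warnings.append("No backend configured yet; add an API key in the web interface after deployment")

    return warnings
```

For example, `check_openwebui_env({"OPENAI_API_KEY": "sk-..."})` returns an empty list, while leaving OLLAMA_BASE_URL at a non-URL placeholder produces a single warning.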

Once the deployment completes, the Open WebUI sign-in/sign-up page will be available at a default xxx.up.railway.app domain; open this URL to access the web interface. If you are interested in setting up a custom domain, I covered it at length in a previous post (see the final section here).

Explore the Open WebUI Interface

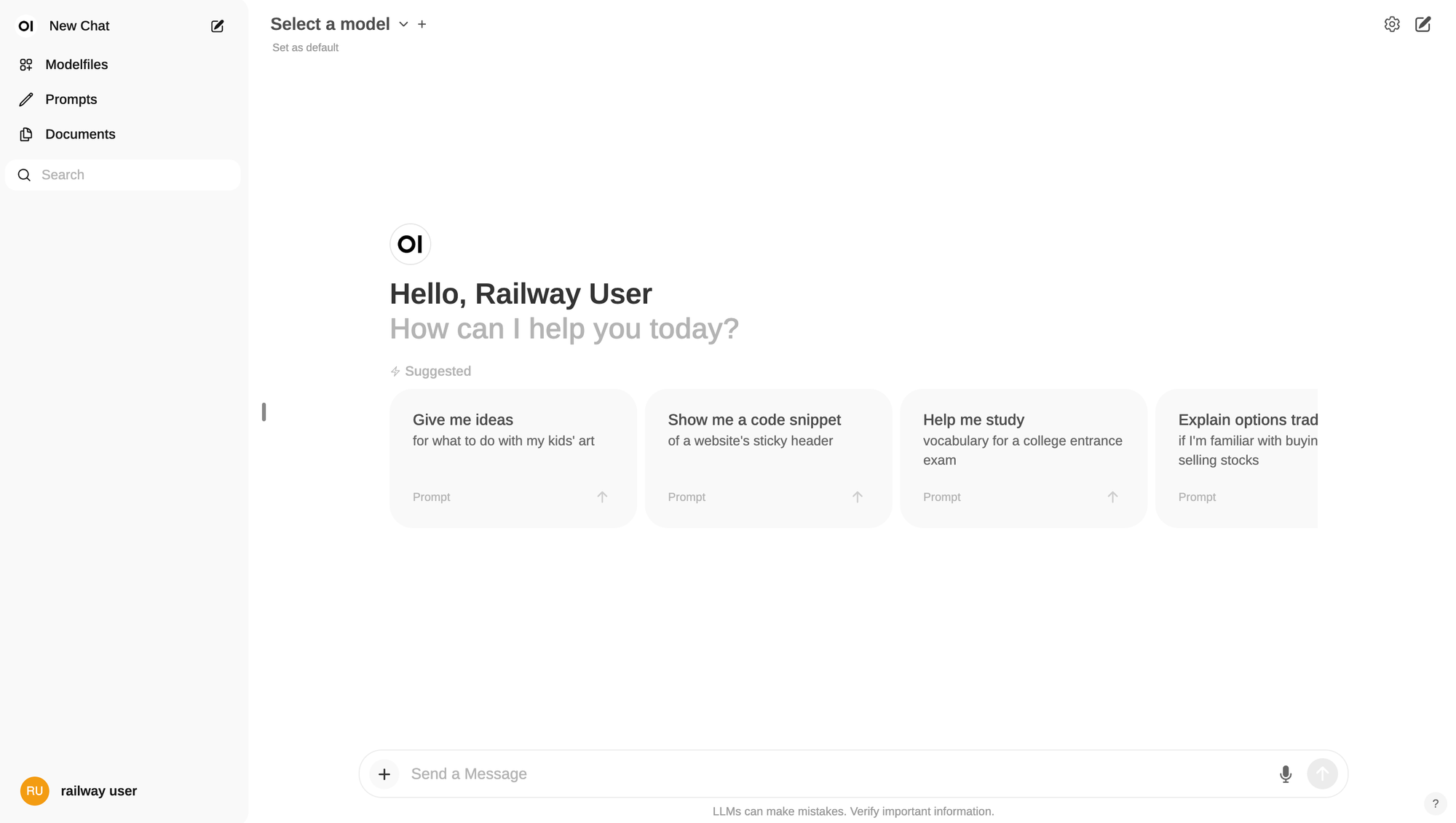

Once you create an account, you'll be redirected to the Open WebUI dashboard.

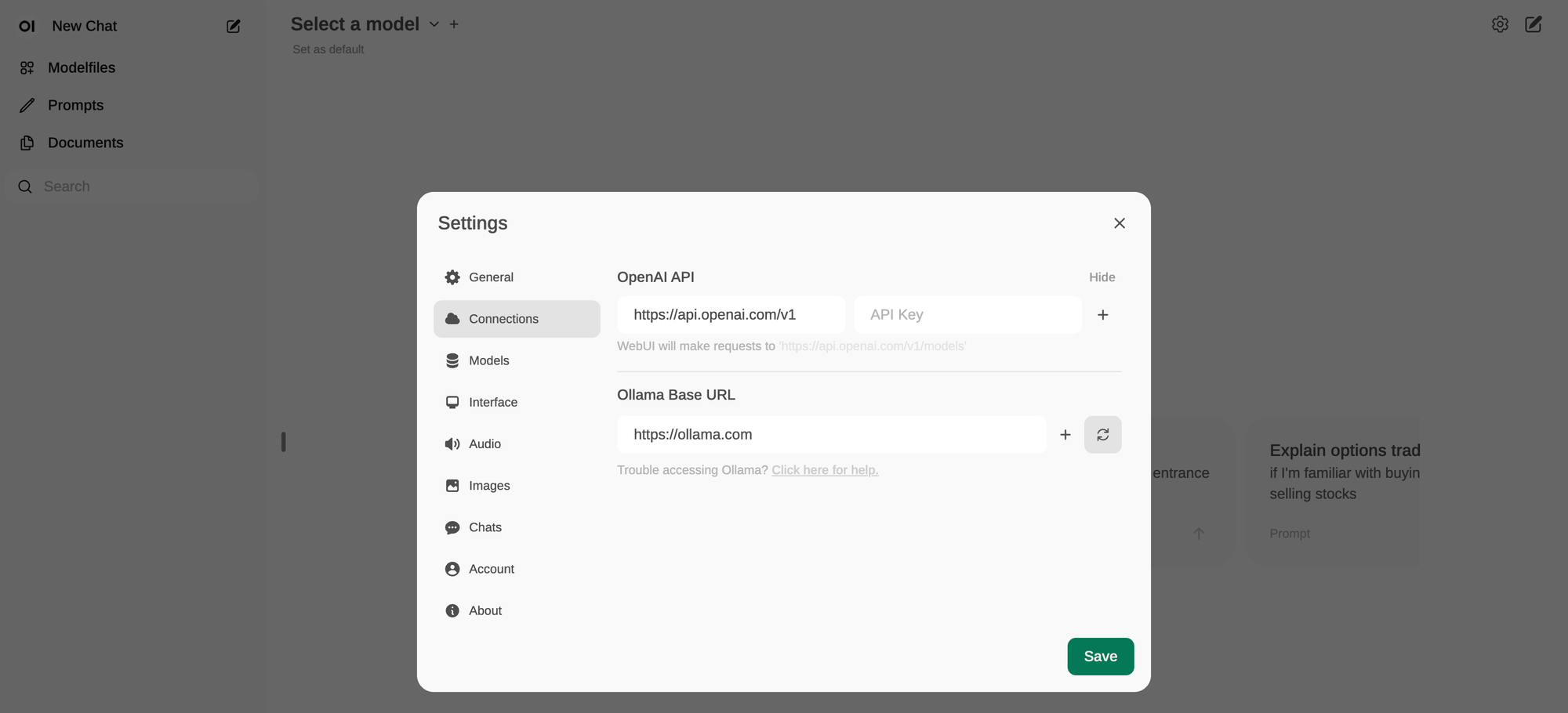

If you added the OpenAI API key during deployment, you should be able to use the interface immediately. If not, click the settings icon in the top-right corner, select Connections and enter the API key. You can also configure your Ollama base URL here.

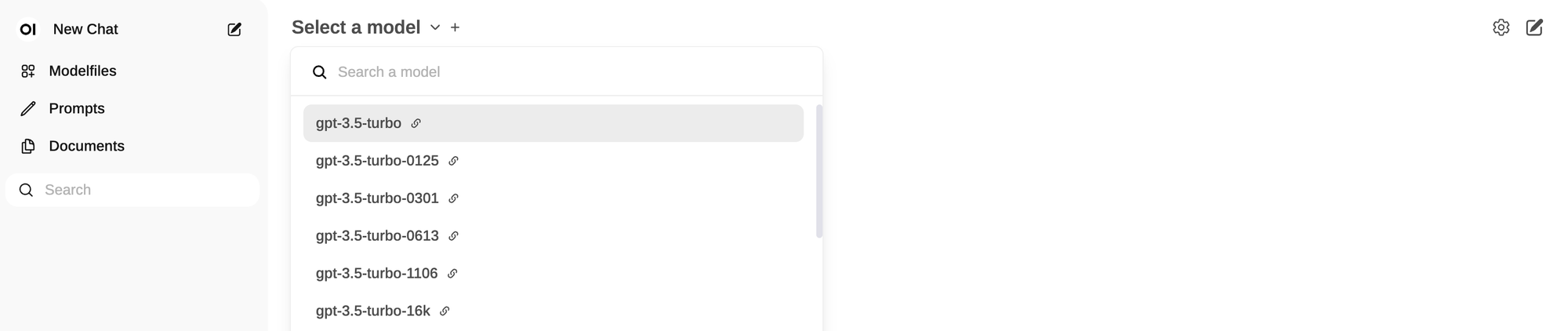

Once the connections have been set up, the associated models will show up in the Select a model dropdown list. Pick one, say gpt-3.5-turbo, and proceed.
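If a model you expect is missing from the dropdown, querying the backends directly can help tell whether the problem lies with the connection or the interface. Below is a minimal Python sketch, assuming Ollama's standard `GET /api/tags` endpoint (which returns a `models` list whose entries have a `name`) and OpenAI's `GET /v1/models` endpoint (which returns a `data` list whose entries have an `id`). The helper names are hypothetical and this is not how Open WebUI itself is implemented; it simply reads the same two variables used during deployment from your local environment.

```python
import json
import os
import urllib.request
from typing import Optional


def parse_ollama_tags(payload: dict) -> list[str]:
    """Extract model names from an Ollama /api/tags response."""
    # Shape: {"models": [{"name": "llama3:latest", ...}, ...]}
    return [m["name"] for m in payload.get("models", [])]


def parse_openai_models(payload: dict) -> list[str]:
    """Extract model IDs from an OpenAI /v1/models response, sorted for readability."""
    # Shape: {"object": "list", "data": [{"id": "gpt-3.5-turbo", ...}, ...]}
    return sorted(m["id"] for m in payload.get("data", []))


def get_json(url: str, headers: Optional[dict] = None, timeout: float = 10.0) -> dict:
    """GET a URL and decode the JSON body; raises on network or HTTP errors."""
    req = urllib.request.Request(url, headers=headers or {})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def list_models() -> list[str]:
    """List models from whichever backends are configured via environment variables."""
    models: list[str] = []
    ollama_base = os.environ.get("OLLAMA_BASE_URL", "").strip()
    if ollama_base:
        models += parse_ollama_tags(get_json(ollama_base.rstrip("/") + "/api/tags"))
    openai_key = os.environ.get("OPENAI_API_KEY", "").strip()
    if openai_key:
        auth = {"Authorization": f"Bearer {openai_key}"}
        models += parse_openai_models(get_json("https://api.openai.com/v1/models", headers=auth))
    return models
```

To try it, export OLLAMA_BASE_URL and/or OPENAI_API_KEY in your shell and call `print(list_models())`; with neither set, it returns an empty list without making any requests.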

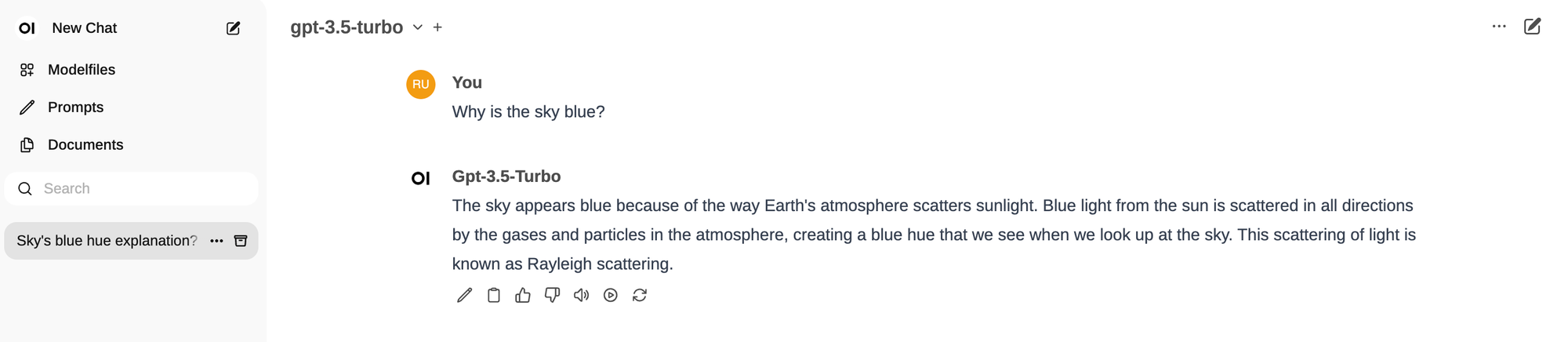

You can use the interface in text completion or chat mode. You can also save the selected model as the default for future queries.

As mentioned earlier, Open WebUI offers an extensive set of features that go well beyond standard ChatGPT capabilities. Features such as models, prompts and documents are outside the scope of this post, but feel free to explore them on your own.