Blinkist for URLs with LlamaIndex and OpenAI

A brief guide to AI-generated web URL summaries with LlamaIndex and OpenAI.

In a previous post, we explored generating AI summaries for web URLs using OpenAI large language models (LLMs) and the LangChain LLM framework. Today, we'll do something similar, but this time with a competing open-source LLM framework: LlamaIndex.

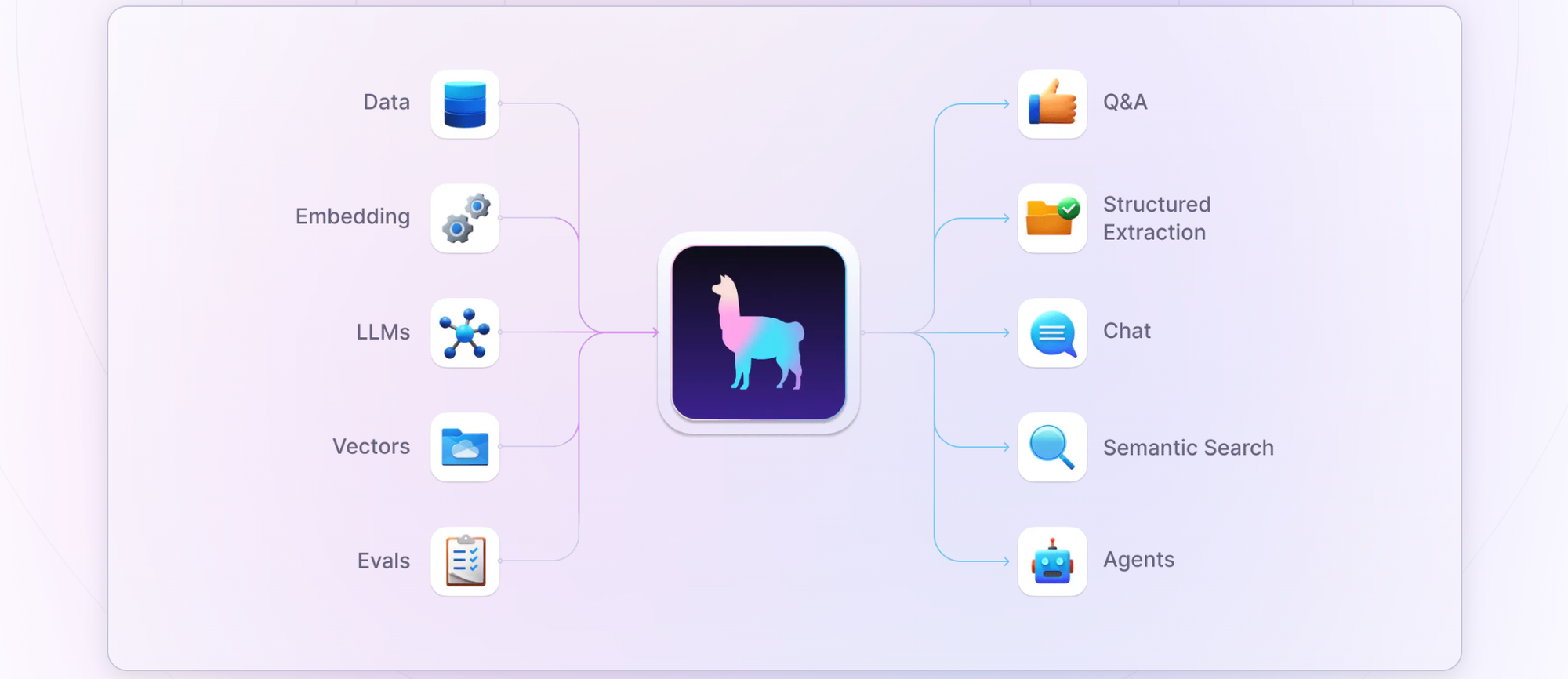

What is LlamaIndex?

LlamaIndex is an open-source project that provides a simple interface between LLMs and external data sources such as APIs, PDFs, and SQL databases. It builds indices over structured and unstructured data, abstracting away the differences between data sources. When building LLM applications, LlamaIndex helps you ingest, parse, index, and query your data, adding context from external sources as needed.
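To make the "ingest and parse" step a little more concrete, here's a stdlib-only sketch that strips tags from an HTML page and splits the resulting text into fixed-size word chunks. It is a simplified stand-in, not LlamaIndex's code: SimpleWebPageReader delegates HTML conversion to the html2text package when html_to_text=True, and LlamaIndex's default node parser splits on sentence boundaries within a token budget rather than on raw word counts. The names TextExtractor, html_to_text, and chunk_words are hypothetical.

```python
from html.parser import HTMLParser

SKIP_TAGS = {"script", "style"}  # contents are never shown to readers
BLOCK_TAGS = {"p", "div", "br", "li", "h1", "h2", "h3", "h4", "h5", "h6", "tr"}


class TextExtractor(HTMLParser):
    """Collects visible text, inserting line breaks around block-level tags."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so &amp; etc. are decoded
        self.parts: list[str] = []
        self.skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    """Hypothetical helper: strip tags and collapse whitespace, one line per block."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    lines = (" ".join(line.split()) for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)


def chunk_words(text: str, size: int = 50) -> list[str]:
    """Hypothetical helper: split text into chunks of at most `size` words."""
    if size <= 0:
        raise ValueError("size must be positive")
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


page = (
    "<html><head><style>p { color: red; }</style></head>"
    "<body><h1>Title</h1><p>Hello <b>world</b>.</p></body></html>"
)
text = html_to_text(page)
print(text)                       # prints "Title" and "Hello world." on separate lines
print(chunk_words(text, size=2))  # ['Title Hello', 'world.']
```

The chunk size here is deliberately tiny so the output is easy to follow; real pipelines pick chunk sizes that fit comfortably inside the model's context window.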

LlamaIndex offers several tools that extend what LLMs can do with your data:

- Data connectors to ingest data from various sources in different formats

- Data indexes to structure data in intermediate representations (such as vector embeddings) that LLMs can consume

- Query engines as interfaces for question-answering on your data

- Chat engines as interfaces for conversational interactions with data

- Agents as complex, multi-step workers, with functions and API integrations

- Observability and evaluation tools to continuously monitor and assess your app
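The pairing of an index with a query engine is easiest to picture with the index type this post uses. LlamaIndex's documentation describes a SummaryIndex as storing nodes in a simple sequence and, by default, passing every node to the response synthesizer at query time. The sketch below is a toy model of that idea using a create-and-refine loop: draft an answer from the first chunk, then ask the LLM to refine it with each following chunk. ToySummaryIndex, the LLM type alias, and fake_llm are hypothetical; the prompts are illustrative rather than LlamaIndex's actual templates, and the fake LLM exists only so the example runs without an API key.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical LLM interface: takes a prompt string, returns a completion string.
LLM = Callable[[str], str]


@dataclass
class ToySummaryIndex:
    """Toy model of a summary index: keeps every chunk, in order, with no embeddings."""

    chunks: list[str] = field(default_factory=list)

    def query(self, question: str, llm: LLM) -> str:
        """Answer `question` by visiting every chunk with a create-and-refine loop."""
        if not self.chunks:
            raise ValueError("the index has no chunks to query")
        # Create: draft an answer from the first chunk alone.
        answer = llm(f"Context:\n{self.chunks[0]}\n\nQuestion: {question}")
        # Refine: show the LLM its current answer plus the next chunk, one at a time.
        for chunk in self.chunks[1:]:
            answer = llm(
                f"Existing answer:\n{answer}\n\n"
                f"New context:\n{chunk}\n\n"
                f"Refine the existing answer to: {question}"
            )
        return answer


# Fake LLM so the sketch runs offline; it records each prompt and returns a versioned stub.
prompts: list[str] = []


def fake_llm(prompt: str) -> str:
    prompts.append(prompt)
    return f"summary v{len(prompts)}"


index = ToySummaryIndex(chunks=["part one", "part two", "part three"])
print(index.query("Summarize the article in 200-250 words.", fake_llm))  # summary v3
print(len(prompts))  # 3
```

Because every chunk passes through the LLM, cost and latency grow with the length of the page. That's usually acceptable when summarizing a single web page, since a summary needs to see the whole text; LlamaIndex's default "compact" response mode also packs several chunks into each prompt to reduce the number of calls.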

Use Streamlit and LlamaIndex to Generate URL Summaries

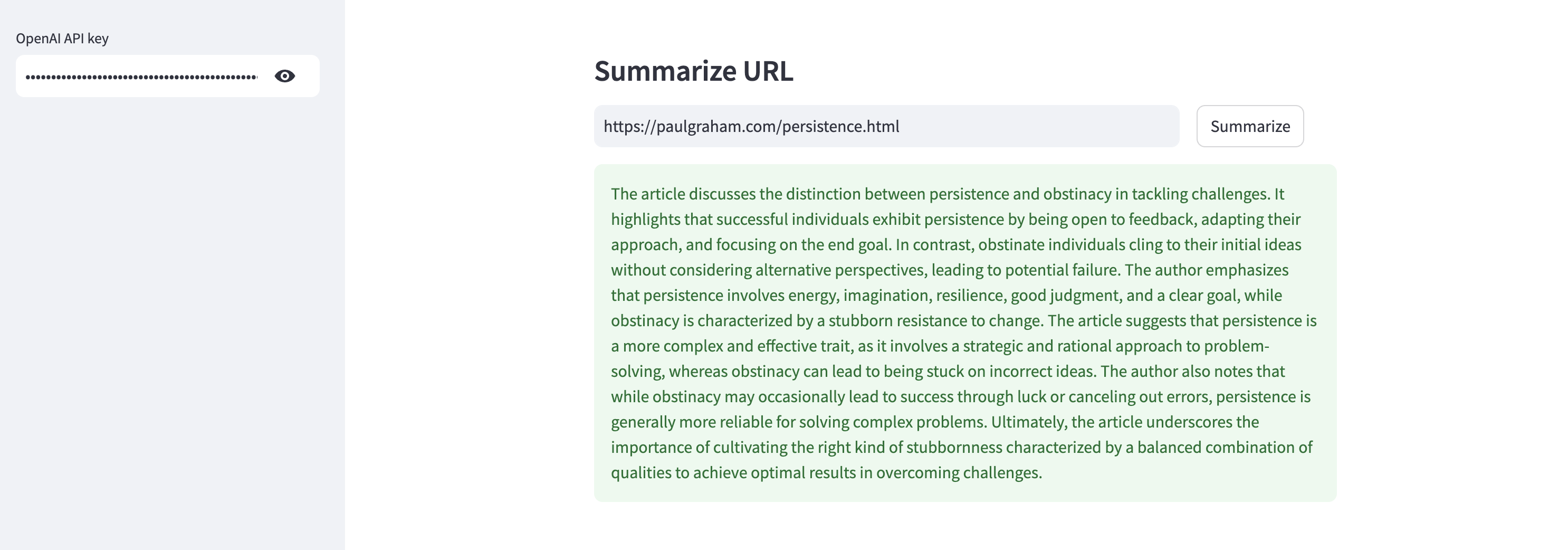

To generate web URL summaries, we'll use the SimpleWebPageReader class to fetch the URL's content as documents and the SummaryIndex class to index those documents. Because the index exposes a query engine, we could also ask free-form questions about the page's contents; here, though, I'll use a fixed prompt that simply asks for a concise summary. The OpenAI integration is transparent to the user: you just need to provide an OpenAI API key, which LlamaIndex uses automatically in the background. We'll wrap the whole interaction in a Streamlit web application for ease of development and deployment. Here's my streamlit_app.py file describing the entire flow; you can find the complete source code on GitHub.

```python
import os

import streamlit as st
import validators
from llama_index.core import Settings, SummaryIndex
from llama_index.llms.openai import OpenAI
from llama_index.readers.web import SimpleWebPageReader

# Streamlit app config
st.subheader("Summarize URL")

with st.sidebar:
    openai_api_key = st.text_input("OpenAI API key", type="password")

col1, col2 = st.columns([4, 1])
url = col1.text_input("URL", label_visibility="collapsed")
summarize = col2.button("Summarize")

if summarize:
    # Validate inputs
    if not openai_api_key.strip() or not url.strip():
        st.error("Please provide the missing fields.")
    elif not validators.url(url):
        st.error("Please provide a valid URL.")
    else:
        try:
            with st.spinner("Please wait..."):
                # Set the key before creating the LLM so the OpenAI client picks it up
                os.environ["OPENAI_API_KEY"] = openai_api_key
                # model="gpt-4o-mini" also works with recent llama-index-llms-openai versions
                Settings.llm = OpenAI(temperature=0.2, model="gpt-3.5-turbo")

                # Fetch the page and convert its HTML to plain text
                documents = SimpleWebPageReader(html_to_text=True).load_data([url])
                # A summary index keeps every chunk; its query engine sends them all to the LLM
                index = SummaryIndex.from_documents(documents)
                query_engine = index.as_query_engine()
                summary = query_engine.query("Summarize the article in 200-250 words.")

            st.success(str(summary))
        except Exception as e:
            # st.exception expects the exception object itself, not a string
            st.exception(e)
```

Deploy the Streamlit App on Railway

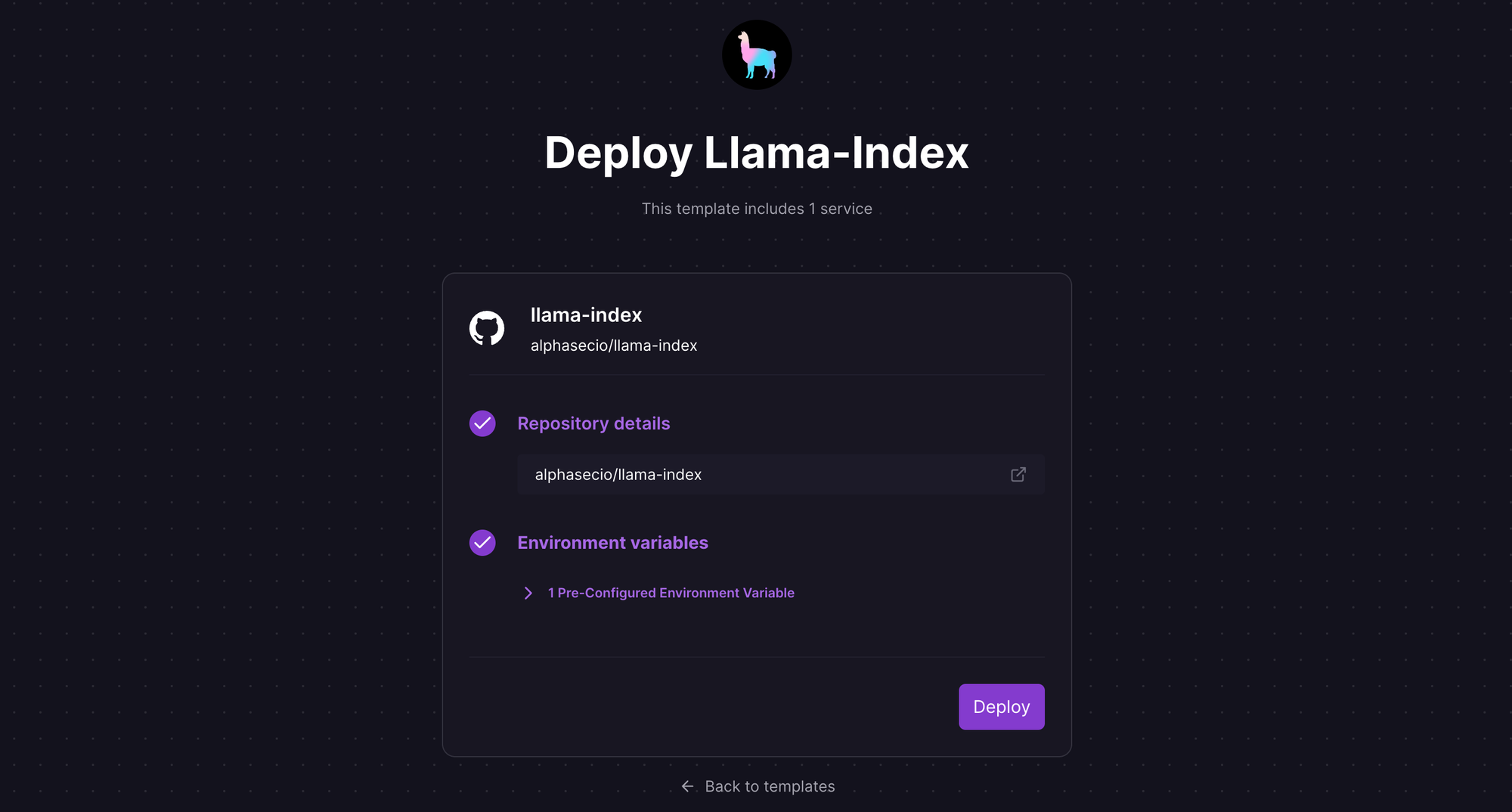

Let's deploy the Streamlit app on Railway, a modern app hosting platform. If you don't already have an account, sign up using GitHub, and click Authorize Railway App when redirected. Review and agree to Railway's Terms of Service and Fair Use Policy if prompted. Launch the Llama-Index one-click starter template to deploy it instantly on Railway.

Review the settings and click Deploy; the deployment starts immediately. This template deploys multiple apps; see the template page for details.
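The one-click template already configures how the app starts. If you deploy your own copy of the repository instead, Railway generally expects a web service to listen on 0.0.0.0 and on the port it supplies in the PORT environment variable. Below is a minimal sketch of a launcher that builds the matching streamlit run command with Streamlit's --server.port and --server.address options. The file name run.py and the helpers streamlit_command and launch are hypothetical, not part of the template, and the sketch assumes Streamlit is installed in the deployed environment.

```python
"""run.py (hypothetical): start the Streamlit app on the port Railway assigns."""
import os
import subprocess
import sys
from collections.abc import Mapping

DEFAULT_PORT = "8501"  # Streamlit's own default port


def streamlit_command(app_path: str, env: Mapping[str, str]) -> list[str]:
    """Build the argv for `streamlit run`, reading the port from env["PORT"]."""
    port = env.get("PORT", DEFAULT_PORT)
    if not port.isdigit():
        raise ValueError(f"PORT must be a number, got {port!r}")
    return [
        sys.executable, "-m", "streamlit", "run", app_path,
        "--server.port", port,
        "--server.address", "0.0.0.0",  # listen on all interfaces, not only localhost
    ]


def launch(app_path: str = "streamlit_app.py") -> int:
    """Run the Streamlit server in the foreground and return its exit code."""
    return subprocess.run(streamlit_command(app_path, os.environ)).returncode


print(streamlit_command("streamlit_app.py", {"PORT": "8080"})[3:])
# ['run', 'streamlit_app.py', '--server.port', '8080', '--server.address', '0.0.0.0']
```

To use it, you could end run.py with sys.exit(launch()) under an if __name__ == "__main__": guard and set the service's start command to python run.py. Falling back to port 8501 keeps local runs working when PORT isn't set.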

Once the deployment completes, the Streamlit apps will be available at default xxx.up.railway.app domains; open the respective URLs to access the web interfaces. If you're interested in setting up a custom domain, I covered it at length in a previous post (see the final section of that post).

When the Streamlit app is ready, provide the OpenAI API key and the source URL, then click Summarize; a concise summary will be ready in a few seconds.

If you'd like to explore other use cases with LlamaIndex, such as chatting with a PDF document, see this post.