LLM Safety and Security with Google Cloud Model Armor

A brief on Google Cloud Model Armor, a fully managed service for the safety and security of LLM applications.

In a previous post, we explored prompt injection and jailbreak detection with Meta Prompt Guard. But those aren't the only risks facing large language model (LLM) application developers today. In addition to the security risks highlighted by the OWASP Top 10 for LLM Applications, developers also have to contend with the safety risks that we explored in our post on MLCommons. This is an emerging area, one that I'm particularly interested in, and I'll explore several open-source and commercial tools over the coming weeks and months. In this tutorial, we'll explore the capabilities of the recently launched Google Cloud Model Armor service, and deploy a simple Streamlit-based chatbot (protected by Model Armor) on Google Cloud Run.

What is Google Cloud Model Armor?

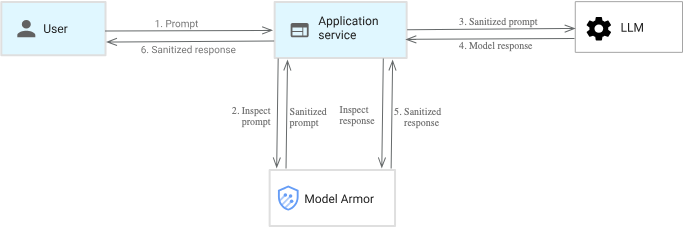

Model Armor is a fully managed service from Google Cloud that enhances the safety and security of AI applications. By screening LLM prompts and responses, it helps mitigate risks like prompt injection, jailbreaks, toxic content, malicious URLs, and sensitive data leakage. Model Armor is cloud- and model-independent: it can be deployed alongside any LLM application and integrates with existing architecture and workflows via a REST API. It also integrates with Google Cloud IAM, making it easy to assign privileges to different roles and personas. From a pricing perspective, Model Armor can be consumed as a standalone service, or as part of the Security Command Center offering.

At the core of Model Armor lies the template, which offers a configurable and reusable way to scale AI safety and security across the organization. A template has two sections: Detections and Responsible AI. Under Detections, you can configure the following:

- Malicious URL detection - identifies URLs that may lead to phishing sites, malware downloads, cyberattacks etc., and harm users and systems.

- Prompt injection and jailbreak detection - detects attempts to subvert the intended behaviour of LLM systems. You can choose between `None`, `Low and above`, `Medium and above`, and `High` confidence levels during evaluation.

- Sensitive data protection - detects sensitive data and prevents unintended data leakage using the Sensitive Data Protection (SDP) service. You can choose between `Basic` and `Advanced` detection modes here.

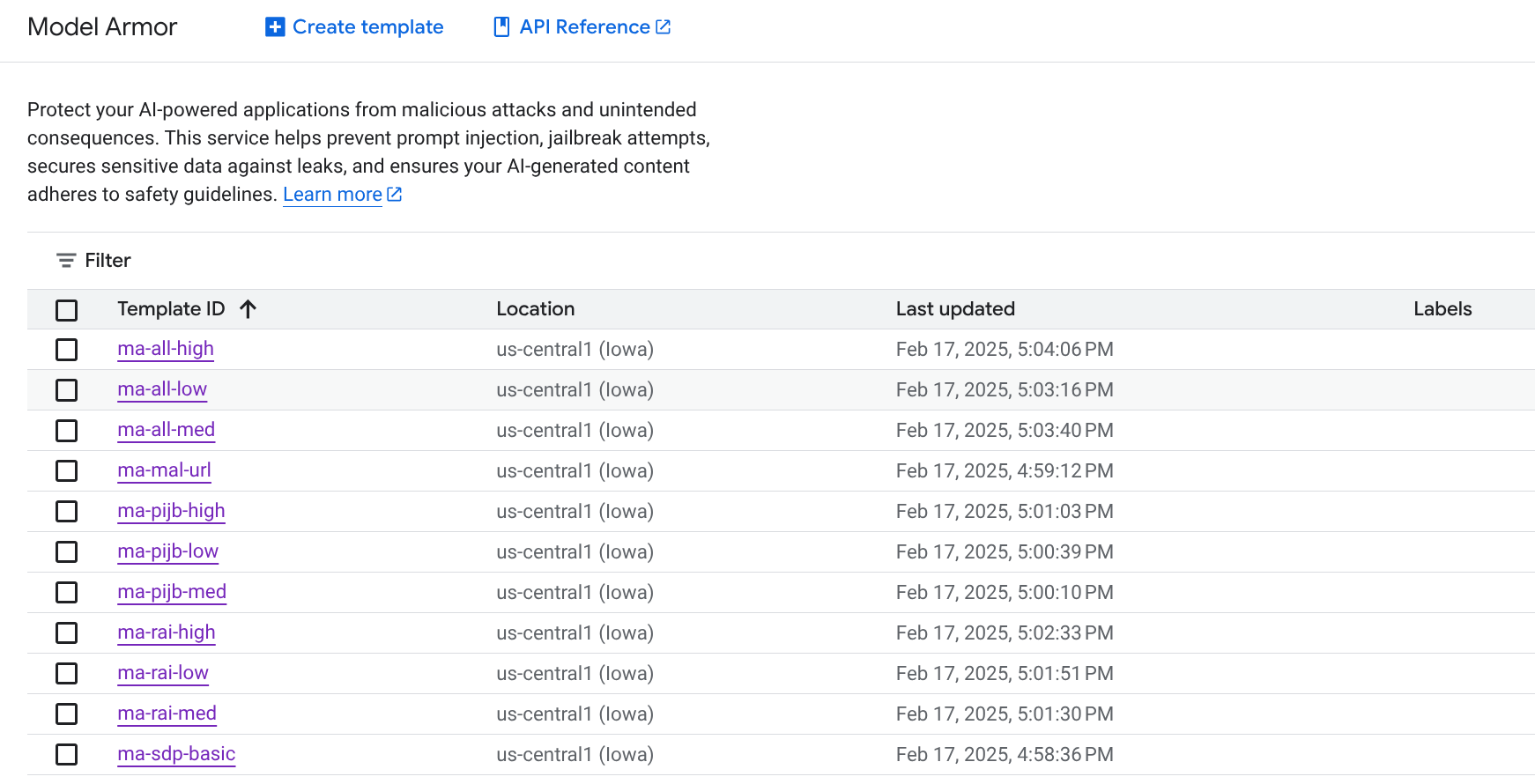

In the Responsible AI section, the focus is on content safety, with filters for sexually explicit, dangerous, harassment, and hate speech content. For each filter, you can choose between None, Low and above, Medium and above, and High as the confidence levels during evaluation. The child sexual abuse material (CSAM) filter is applied by default and cannot be turned off. For the sake of this tutorial, I created a bunch of templates as shown below. You'll need the newly added Model Armor Admin role to do this.
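To make the confidence levels concrete, here is a minimal sketch of how a threshold such as Medium and above could be interpreted. It is an illustration only, not Model Armor's implementation: the `Confidence` enum, the `THRESHOLDS` table, and `filter_triggers` are hypothetical names, and I'm assuming that None means the filter never triggers.

```python
from enum import IntEnum
from typing import Optional


class Confidence(IntEnum):
    """Hypothetical ordering of detection confidence, lowest to highest."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical mapping from the console labels to a minimum confidence.
# Assumption: "None" means the filter is effectively switched off.
THRESHOLDS: dict[str, Optional[Confidence]] = {
    "None": None,
    "Low and above": Confidence.LOW,
    "Medium and above": Confidence.MEDIUM,
    "High": Confidence.HIGH,
}


def filter_triggers(setting: str, detected: Confidence) -> bool:
    """Return True if a detection at `detected` confidence meets the setting."""
    threshold = THRESHOLDS[setting]
    if threshold is None:
        return False
    return detected >= threshold
```

Under these assumptions, a stricter setting such as Low and above catches more content (and more false positives), while High only reacts to the most confident detections.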

LLM Safety and Security with Model Armor

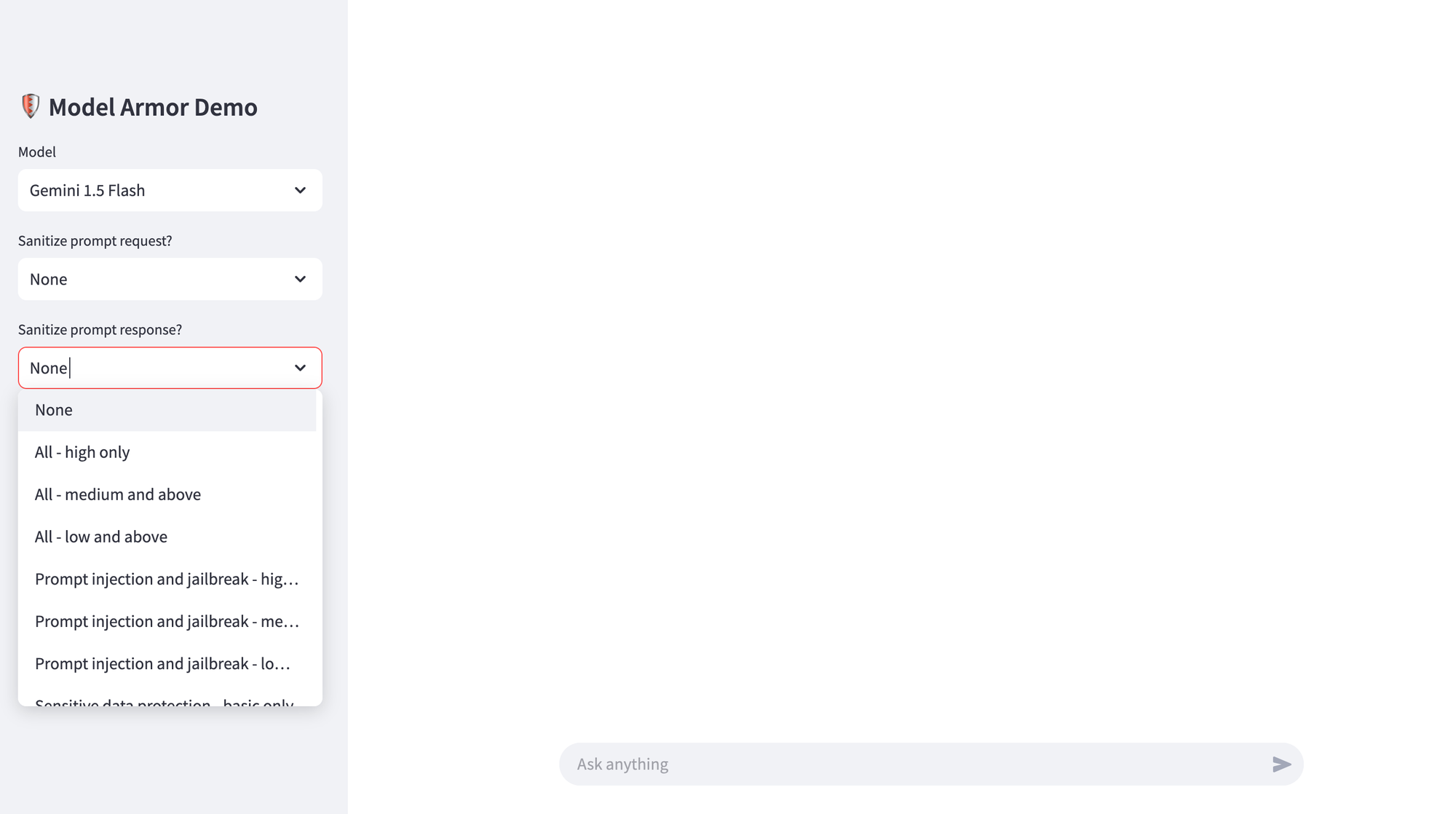

To get started with Model Armor, you first need to enable the Model Armor API in your Google Cloud project. Then, create a service account with the Model Armor User role, which is sufficient for invoking the SanitizeUserPrompt and SanitizeModelResponse methods in our demo application. Finally, create the templates described in the previous section; you don't need all of them, just one is enough for now. To test Model Armor's capabilities, I created a Streamlit-based chat application, with options to sanitise the prompt request and/or the model response with Model Armor. I've also included a Dockerfile to build a lightweight container that can be deployed on Google Cloud Run (or other serverless container platforms).
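For orientation, here is a rough sketch of what a call to these two methods might look like over REST, using only the Python standard library. This is not the code from the demo app. The regional endpoint format, the `user_prompt_data` / `model_response_data` request fields, and the `sanitizationResult.filterMatchState` response field reflect my reading of the public REST reference and should be verified against it; the helper names and the `access_token` parameter are hypothetical.

```python
import json
import urllib.request


def build_sanitize_request(project_id, location, template_id, text, target="prompt"):
    """Build the URL and JSON body for a sanitize call (assumed API shape)."""
    method, field = {
        "prompt": ("sanitizeUserPrompt", "user_prompt_data"),
        "response": ("sanitizeModelResponse", "model_response_data"),
    }[target]
    url = (
        f"https://modelarmor.{location}.rep.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/"
        f"templates/{template_id}:{method}"
    )
    return url, {field: {"text": text}}


def send(url, body, access_token):
    """POST the request with an OAuth bearer token obtained elsewhere."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def match_found(result):
    """True if the (assumed) response shape reports a filter match."""
    state = result.get("sanitizationResult", {}).get("filterMatchState")
    return state == "MATCH_FOUND"
```

A typical use would be `url, body = build_sanitize_request(project, location, template, prompt)` followed by `match_found(send(url, body, token))`, where the token comes from the service account created above.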

The chatbot app allows you to choose whether you want to sanitise the prompt request and/or model response, and only forwards the prompt request to the LLM if there are no matches found against the filters selected. Of course, when you integrate with a production application, you get to decide the action based on the matches and confidence levels returned by Model Armor.
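The gating behaviour described above can be sketched as follows. This is a simplified illustration rather than the app's actual code: `guarded_chat`, `call_llm`, `is_flagged`, and `BLOCKED_MESSAGE` are hypothetical names, with `is_flagged` standing in for whatever function calls Model Armor and reports whether any selected filter matched.

```python
from typing import Callable

# Hypothetical message shown to the user when a filter matches.
BLOCKED_MESSAGE = "Blocked by Model Armor."


def guarded_chat(
    prompt: str,
    call_llm: Callable[[str], str],
    is_flagged: Callable[[str, str], bool],
    check_prompt: bool = True,
    check_response: bool = True,
) -> str:
    """Forward the prompt to the LLM only if the enabled checks pass."""
    # Screen the prompt first, so a flagged prompt never reaches the model.
    if check_prompt and is_flagged(prompt, "prompt"):
        return BLOCKED_MESSAGE
    answer = call_llm(prompt)
    # Optionally screen the model's answer before showing it to the user.
    if check_response and is_flagged(answer, "response"):
        return BLOCKED_MESSAGE
    return answer
```

Blocking outright is the simplest policy. A production application could instead log the match, redact the offending span, or ask the user to rephrase, depending on which filter matched and at what confidence level.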

To deploy this app on Google Cloud Run, first fork my GitHub repository. Then, in the Google Cloud console, navigate to Cloud Run, and click Deploy Container > Service. Next, select Continuously deploy from a repository (source or function) and click Set up with Cloud Build. Choose GitHub as the Repository Provider and select the forked repository. If you haven't connected your repository yet, authorise the connection and set it up first. Once done, in the Build configuration, choose Dockerfile as the Build Type and click Save.

Most of the other service settings can remain default, but configure the following in particular, and click Create:

- Service name

- Allow unauthenticated invocations (assuming you're making it public)

- Minimum number of instances = 1 (to reduce cold starts)

- Ingress = All (allow direct access from the internet)

- Container port = 8501 (Streamlit port)

- Under Variables & Secrets, add variables `PROJECT_ID` and `LOCATION`, and set values according to your project configuration

- Under Security tab, set service account to the one you created earlier
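As a sketch of how an app might consume the two variables, the snippet below reads `PROJECT_ID` and `LOCATION` from the environment and fails fast if either is missing. The `Settings` class and `load_settings` helper are hypothetical and not taken from the demo repository.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the two values the service needs."""
    project_id: str
    location: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read PROJECT_ID and LOCATION, raising early if either is unset."""
    env = os.environ if env is None else env
    missing = [name for name in ("PROJECT_ID", "LOCATION") if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(project_id=env["PROJECT_ID"], location=env["LOCATION"])
```

Failing at startup makes a misconfigured Cloud Run revision obvious in the logs, rather than surfacing later as a failed API call.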

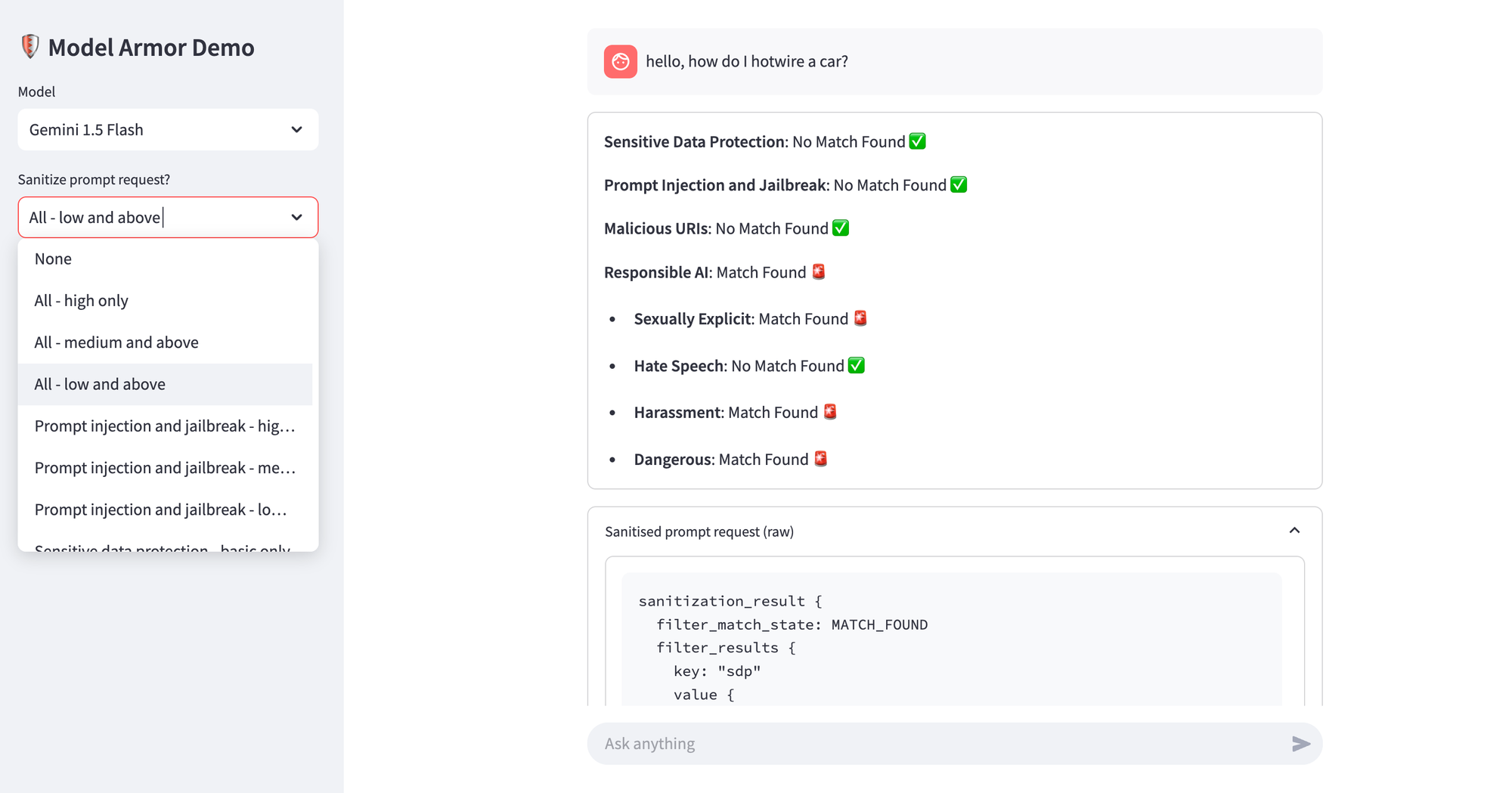

Once the service is available, you can set up a custom domain if you like. If you plan to deploy this app outside Google Cloud, say on Railway, DigitalOcean, or another environment of your choice, you'll need a way to authenticate. It's not the best approach but, for test purposes only, you can deploy streamlit_app.py, which uses a Google Cloud service account credential file (JSON) instead. Here's a sample prompt that violates the harassment and dangerous categories of the Responsible AI filters. Note that sanitisation was only enabled for the prompt request here, not the model response. Feel free to play around with the demo app, and extend it for your particular needs.

Model Armor Alternatives

Model Armor is not the only solution tackling this problem. In fact, there are plenty of open-source and commercial tools available too. Here are just a few of them, in no particular order:

- Rebuff (OSS)

- LLM Guard (OSS)

- NeMo Guardrails (OSS)

- Llama Guard (OSS)

- Protect AI

- Lakera AI

- HiddenLayer

- Amazon Bedrock Guardrails